Archive

SQL Server Denali – Sequences

Another new programmability feature added in the Denali CTP is ‘Sequences’ – a concept very familiar to those of us who already deal with Oracle, but an unusual addition for SQL Server and one that makes me scratch my head and ask – why? SQL Server already has the identity column feature, which Oracle lacks, hence the need for sequences in Oracle. With any identity / numeric key / FK mechanism it is important that the value has no relation to the data it represents other than being an arbitrary number identifying the row; if anything, it would be bad design to rely on identity values being in sequence, or contiguous in any way. In SQL Server they are not guaranteed to be contiguous at all – a transaction rollback, or an increment other than 1, for example, will prevent it.

Sequences are primarily for when you wish to know a number in advance of using it, or perhaps you wish to use the same number for a number of records spread across tables (and thus relate them using that number.)
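As a rough sketch of that second use, the batch below fetches one value up front and then uses it to relate rows in two tables. The sequence and table names (dbo.OrderNumber, dbo.OrderHeader, dbo.OrderLine) are invented for illustration, and it assumes a Denali instance where you are allowed to create objects.

```sql
-- Hypothetical objects, for illustration only.
CREATE SEQUENCE dbo.OrderNumber AS int START WITH 1 INCREMENT BY 1;
GO

CREATE TABLE dbo.OrderHeader (OrderNo int NOT NULL PRIMARY KEY, Customer nvarchar(50) NOT NULL);
CREATE TABLE dbo.OrderLine (OrderNo int NOT NULL, Item nvarchar(50) NOT NULL);
GO

-- Fetch the number before any row exists, then reuse it in both tables.
DECLARE @OrderNo int;
SET @OrderNo = NEXT VALUE FOR dbo.OrderNumber;

INSERT INTO dbo.OrderHeader (OrderNo, Customer) VALUES (@OrderNo, N'Some customer');
INSERT INTO dbo.OrderLine (OrderNo, Item) VALUES (@OrderNo, N'First item');
INSERT INTO dbo.OrderLine (OrderNo, Item) VALUES (@OrderNo, N'Second item');
```

With an identity column the header row would have to be inserted first and its generated value read back before the lines could be written.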

Looking at the syntax you can see that the SQL Server and Oracle Syntax are very similar and share the same keywords.

SQL Server Syntax:

CREATE SEQUENCE [schema_name . ] sequence_name [ <sequence_property_assignment> [ ,…n ] ] [ ; ]

<sequence_property_assignment> ::= {[ AS { built_in_integer_type | user-defined_integer_type}]

START WITH <constant>

INCREMENT BY <constant>

{ MINVALUE <constant> | NO MINVALUE }

{ MAXVALUE <constant> | NO MAXVALUE }

{ CYCLE | NO CYCLE }

{ CACHE [ <constant> ] | NO CACHE } }

Oracle Syntax:

CREATE SEQUENCE <name>
INCREMENT BY <#>
START WITH <#>
MAXVALUE <#> / NOMAXVALUE
MINVALUE <#> / NOMINVALUE
CYCLE / NOCYCLE
CACHE <#> / NOCACHE
ORDER / NOORDER

Most of the keywords are pretty self-explanatory, and the comparison shows how close the two products are in terms of syntax. The keyword that makes me cringe the most is CYCLE – it’s bad enough using a sequence number instead of an identity, but even worse when you consider that it may not be unique. The advice there is to create an additional unique index on the field to prevent an insert / update from taking a duplicate – but that seems like a bit of a ‘fudge’ which works around the real problem instead of solving it.

To add to the weirdness of the construct, you can even ask for a sequence based on an OVER clause. Using the Adventure Works database as an example, I created a sequence:

create sequence testSequence as integer start with 1 increment by 1 minvalue 1 maxvalue 10000

And then used it within a select statement as follows:

select next value for testSequence over (order by Name asc) as id, Name from Production.Product

The results come back:

1 Adjustable Race
2 All-Purpose Bike Stand
3 AWC Logo Cap
4 BB Ball Bearing
...

In case you were thinking that was relatively useful, when you re-run the command, you of course are returned a different set of numbers, as the sequence does not restart, making this one of the weirdest features I have seen.

505 Adjustable Race
506 All-Purpose Bike Stand
507 AWC Logo Cap
508 BB Ball Bearing
...

If you attempt to place the order on the outside in the following manner, SQL Server will just throw an error.

select next value for testSequence as id, Name from Production.Product order by Name asc

Msg 11723, Level 15, State 1, Line 1
NEXT VALUE FOR function cannot be used directly in a statement that contains an ORDER BY clause unless the OVER clause is specified.

And to round off the errors you can expect to see when using this, when you run the sequence out of values, you will get:

Msg 11728, Level 16, State 1, Line 1
The sequence object 'testSequence' has reached its minimum or maximum value. Restart the sequence object to allow new values to be generated.

Try to create a sequence based on a numeric or decimal with some scale, such as numeric(6,2), and you get:

Msg 11702, Level 16, State 2, Line 1
The sequence object 'testSequence' must be of data type int, bigint, smallint, tinyint, or decimal or numeric with a scale of 0, or any user-defined data type that is based on one of the above integer data types.

Or if you fail to get your starting value within the min and max boundaries you are setting:

Msg 11703, Level 16, State 1, Line 1
The start value for sequence object 'testSequence' must be between the minimum and maximum value of the sequence object.
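Of these errors, the exhausted sequence (Msg 11728) is the one you are likely to want to clear rather than just correct in the definition. A minimal sketch of the restart that the message asks for, assuming you want testSequence to go back to its original starting value of 1:

```sql
-- Reset testSequence so that the next value handed out is 1 again.
ALTER SEQUENCE testSequence RESTART WITH 1;
```

The same statement also resets the numbering between runs of the OVER example shown earlier.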

Overall, sequences remain a bit of a niche feature for me in SQL Server. I just cannot see any normal everyday activity needing them, although they would make porting applications between Oracle and SQL Server a bit easier, since both will be able to use them.

In that kind of situation, though, I would still prefer the GUID mechanisms that we have available to us. They have the same benefit of letting you know a record ID in advance of using it, and they can be stored in either database. They also have the added advantage that they can be created whilst offline from the database, something a sequence cannot do.

Which User Made That Change?

If you have spent any time tinkering about in the transaction log, you will have already come across a bit of a problem when trying to decide what was done and by whom – the ‘what’ part I have decoded in a few posts, but the ‘whom’ part is a lot harder. As far as I can tell the log only contains the SPID of the user who opened the transaction, and does not give us any indication as to who that user really was.

From an investigative perspective this is a bit painful: I can see a row was deleted, but to find out who or what did the deletion I would have to start examining either the SQL Server logs or the Windows Server logs. The default behaviour of SQL Server security, though, is to log only failed login attempts, so the successful ones will not show up – to get those appearing you need to change your SQL Server security settings. You can access these logs from SQL Server Management Studio using either the xp_readerrorlog or sp_readerrorlog procedures, although the textual nature of the log makes it difficult to combine in a set based manner – I can read the values as a human, but machine reading them for any purpose is a bit of a pain. There is also the issue that those logs will be cycled, and the old logs could well be completely offline.

So I would prefer an easier solution, keeping a record of the logins within the database regardless of the SQL Server security settings, and being in a form that allows me to use a bit more of a set based solution against it. To start with, we will need a table to store the information available to us during the logon process:

create table master.dbo.spidArchive
(
    LoginTime datetime2(7)
    ,SPID integer
    ,ServerName nvarchar(100)
    ,LoginName nvarchar(100)
    ,LoginType nvarchar(100)
    ,LoginSID nvarchar(100)
    ,ClientHost nvarchar(100)
    ,IsPooled tinyint
)

The spidArchive table here is created in the master database so that it can cover the connections for any of the databases. You can see we have access to a lot of useful information: not just who executed the command, but which machine they logged in from. The next step is to get SQL Server to add a row to the table every time a login occurs – from SQL Server 2005 onwards we have had access to DDL triggers as well as DML triggers, and have the ability to intercept a number of non-DML events.

create trigger spidLogin on all server after logon
as
declare @eventdata xml;
set @eventdata = EVENTDATA();
INSERT INTO master.dbo.spidArchive
(
    LoginTime
    ,SPID
    ,ServerName
    ,LoginName
    ,LoginType
    ,LoginSID
    ,ClientHost
    ,IsPooled
)
VALUES
(
    @eventdata.value('(/EVENT_INSTANCE/PostTime)[1]','datetime2(7)')
    ,@eventdata.value('(/EVENT_INSTANCE/SPID)[1]','nvarchar(100)')
    ,@eventdata.value('(/EVENT_INSTANCE/ServerName)[1]','nvarchar(100)')
    ,@eventdata.value('(/EVENT_INSTANCE/LoginName)[1]','nvarchar(100)')
    ,@eventdata.value('(/EVENT_INSTANCE/LoginType)[1]','nvarchar(100)')
    ,@eventdata.value('(/EVENT_INSTANCE/SID)[1]','nvarchar(100)')
    ,@eventdata.value('(/EVENT_INSTANCE/ClientHost)[1]','nvarchar(100)')
    ,@eventdata.value('(/EVENT_INSTANCE/IsPooled)[1]','tinyint')
)

During the login process, the EventData() function returns a fixed format XML fragment from which we can extract the values we seek and simply insert into our spidArchive table. Now we have a log being taken of all connections being established to the server, we can start using this to translate from a SPID to a user, even when the user is no longer connected – as long as we know the SPID and the time, we just need to look for the closest entry in the past for that SPID, and that will indicate which user was currently logged on at the time. This function should go in the master database again.

CREATE FUNCTION dbo.ConvertSpidToName(@SPID integer, @Date datetime2(7))
RETURNS nvarchar(100)
AS
BEGIN
    DECLARE @name nvarchar(100)
    SELECT TOP(1) @name = LoginName
    FROM master.dbo.spidArchive
    WHERE SPID = @SPID AND LoginTime <= @Date
    ORDER BY LoginTime DESC;
    RETURN @name;
END

This function just performs the logic stated above and converts the SPID and DateTime into the login name for the user. Once this infrastructure is in place we can use it directly in a call to ::fn_dblog(null,null) to translate the SPID column:

select master.dbo.ConvertSpidToName(log.SPID, log.[Begin Time]) as UserName, log.*
from ::fn_dblog(null,null) log

What you will notice is that for the majority of log lines, there is no user name displayed – this is because the SPID is only recorded against the LOP_BEGIN_XACT entry, the beginning of the transaction. This doesn’t really present a problem: from previous experiments we know all the entries for an individual transaction are given a unique Transaction ID which we can use to group them together. It becomes pretty trivial to join back to the log, connect any transaction entries to the LOP_BEGIN_XACT record, and produce the name on every row possible.

select master.dbo.ConvertSpidToName(log2.SPID, log2.[Begin Time]) as UserName, log.*
from ::fn_dblog(null,null) log
left join ::fn_dblog(null,null) log2
    on log.[Transaction ID] = log2.[Transaction ID]
    and log2.Operation = 'LOP_BEGIN_XACT'

So overall it is not too hard to get the log entries attributed to the accounts that generated them.

A couple of final notes / caveats:

- If your application is using a trusted sub-system approach this of course will not work as a technique, since all the users will be logged into the application through an internal mechanism (such as a users table) and then the application service connects using its own credentials – always a good thing, since then the users have no direct access to the database. In that kind of situation this is of no value: every connection will show up as the same user / source.

- Within my code I chose to use datetime2(7), to be as accurate as possible on the connections and timings. You could drop to just datetime for SQL Server 2005, but with only 1/300th of a second accuracy there is a chance on a very busy server that you could see two entries for a single SPID at the same datetime – which would pose a bit of a problem.

- The spidArchive table cannot be allowed to grow unconstrained – I have not included anything here for clearing down the table, but it is not difficult to conceive of it being archived off, or cleaned up weekly via a SQL Agent job.
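As a sketch of the clean-up mentioned in the last caveat, the statement below could be scheduled as a SQL Agent job step. The 30 day retention period is purely an assumption; keep at least as much login history as the oldest transaction log you expect to investigate.

```sql
-- Assumption: 30 days of login history is enough; adjust to suit.
DELETE FROM master.dbo.spidArchive
WHERE LoginTime < DATEADD(DAY, -30, SYSDATETIME());
```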

SQL Server Denali – Paging

The introduction of paging within SQL Server Denali will have made a significant number of developers happy, all of whom will have previously created home-baked solutions to the same problem. All the solutions have the same underlying problem – paging is by its nature inefficient. Most solutions use the row number analytic function, and then sub-select data from that. For a large dataset that presents a problem – the data has to be fully scanned, sorted and allocated row numbers. A suitable index can eliminate the sort operator, but you still end up scanning the entire index to allocate the row numbers.
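For reference, a typical home-baked solution of the kind described above looks roughly like this. It is a sketch against the Adventure Works Production.Product table, returning the second page of ten products by name; the CTE name and the rn alias are arbitrary.

```sql
-- Number every row by name, then keep only rows 11 to 20.
WITH numbered AS
(
    SELECT ProductID, Name,
           ROW_NUMBER() OVER (ORDER BY Name ASC) AS rn
    FROM Production.Product
)
SELECT ProductID, Name
FROM numbered
WHERE rn BETWEEN 11 AND 20
ORDER BY rn;
```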

In Denali, we can see that they have added support to the Order By clause for a starting offset, along with syntax to denote how many rows we wish to return. The syntax can be examined on MSDN (http://msdn.microsoft.com/en-us/library/ms188385%28v=SQL.110%29.aspx#Offset) and in brief is:

ORDER BY order_by_expression

[ COLLATE collation_name ]

[ ASC | DESC ]

[ ,...n ]

[ <offset_fetch> ]

<offset_fetch> ::=

{

OFFSET { integer_constant | offset_row_count_expression } { ROW | ROWS }

[

FETCH { FIRST | NEXT } {integer_constant | fetch_row_count_expression }

{ ROW | ROWS } ONLY

]

}
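Since both OFFSET and FETCH accept expressions as well as constants, a page number and page size can be passed in as variables. A minimal sketch, where @PageNumber and @PageSize are names chosen here for illustration:

```sql
DECLARE @PageNumber int = 3, @PageSize int = 10;

-- Skip the first two pages and return the third.
SELECT Name, ProductID
FROM Production.Product
ORDER BY Name ASC
OFFSET (@PageNumber - 1) * @PageSize ROWS
FETCH NEXT @PageSize ROWS ONLY;
```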

Seeing this new syntax made me want to try it out and see how the query plans are affected. I am using the trusty Adventure Works as usual – a version for Denali has been put on codeplex, so one quick download later and I was ready to test the new syntax. (Adventure Works download : http://msftdbprodsamples.codeplex.com/releases/view/55330 )

For my tests, I used the Production.Product table, and wished to page the products based on their name. There is a non-clustered index on the Name field of the Product table as well as a clustered index on ProductID, so what would the query plan give?

select * from Production.Product order by name asc offset 10 rows fetch first 10 rows only

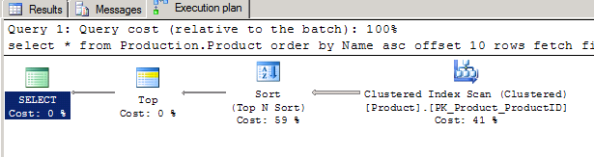

And the query plan is not very surprising

So even with a new syntax the underlying problem remains: the nature of paging is that you are scanning the data. With statistics io turned on the stats come back with Table ‘Product’. Scan count 1, logical reads 15 etc. – not particularly exciting, and what we would expect given the table is contained within 15 pages. It was because of the stats, though, that I noticed an anomaly: in one of the tests I had dropped to returning only a single row from the table as follows:

select * from Production.Product order by name asc offset 10 rows fetch first 1 rows only

What I noticed was that the statistics changed to Table ‘Product’. Scan count 1, logical reads 24 – the entire table is contained within 15 pages, so how could it jump to reading 24?

A quick check of the query plan showed what had changed: the engine decided that it was cheaper to use the Name index, which for the purposes of the ordering was narrower and therefore more efficient, and then join back to the main table via the clustered key. Understandable, although the additional pages read are unlikely to make this more efficient, and I doubt you would see much real world difference. An oddity, but nothing really significant in it.

This triggered a more interesting thought: what happens if we reduce our fields so that the index is considered a covering index? Is SQL going to get smart when making a selection? So far we have only seen full table scans occurring.

select Name, ProductID from Production.Product order by name asc offset 20 rows fetch first 10 rows only

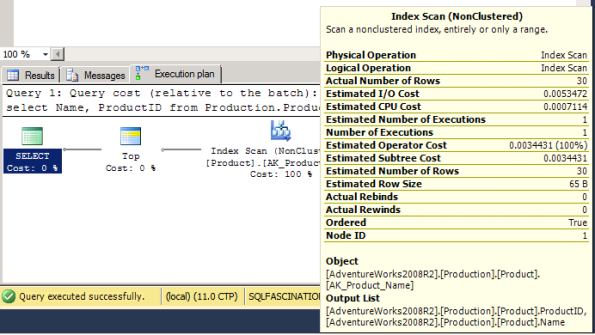

The query is now being covered by the name index, since the non-clustered index includes the clustered key (ProductID) – and this changes the query plan again, although it’s a pretty subtle change to notice.

The expected index scan appears, but if you look closely at the tooltip for the scan, the number of rows being read in the scan is not the total number of rows in the index, but the sum of the offset and the number of rows requested. This was also reflected within the statistics, showing only 2 logical reads – the index uses 6 pages in total. As I changed the number of rows to offset / return, the actual number of rows read changed accordingly. So with a covering index in place, the query engine gets a bit more efficient and does a forward scan of the index until the point at which we have passed a sufficient number of rows. This sounds good – we have avoided scanning the whole index, and provided the paged results in a slightly more efficient manner.

Except those with a quick mind will realise that the performance degrades as you go further and further down the list: requesting the 490-500th products will result in 500 rows being checked, not 30. By putting in a covering index we have sacrificed consistency of query times to gain some potential performance – the full scan solutions will broadly speaking take the same time regardless of which 10 rows you might be requesting, since they have to scan, sort, allocate numbers and then sub-select.

As features go, I like the paging – it removes the need for all the different homegrown solutions that are out there, but the performance of it remains a problem – this is no silver bullet to paging performance problems that people have.

SQL Internals Viewer

As Captain Oates once said, ‘I am just going outside and may be some time’ – it feels like quite a while since I had the time to sit down and write something.

I had a bit of time to take a look at the SQL Internals Viewer (http://internalsviewer.codeplex.com/) , it has been out for some time but I had never downloaded it to play around to see how useful it is in terms of a way of learning more about the internals.

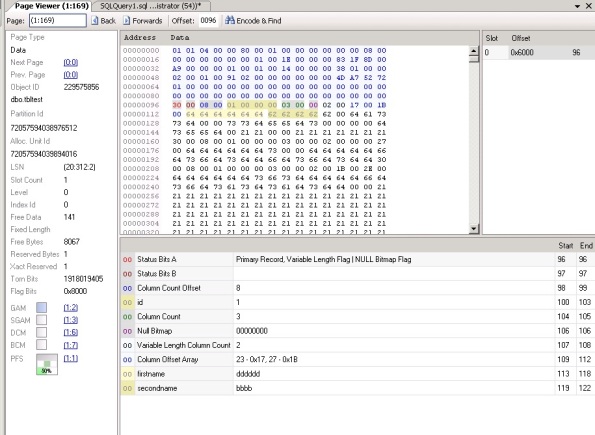

The Page Viewer is excellent, the breakdown of a page into the component parts for a row and the display of the page data is a superb aid to anyone wanting to understand how the data is stored on a page. Whilst you can use DBCC PAGE to get at all this information, presenting it in such a readable form will satisfy most people easily.
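For comparison, the raw DBCC PAGE route looks something like the sketch below. DBCC PAGE is undocumented, and the database name and page address here (file 1, page 100) are placeholders rather than values taken from this post; substitute a page that actually belongs to the object you are interested in.

```sql
-- Send DBCC output to the client session instead of the error log.
DBCC TRACEON (3604);

-- Arguments: database name, file id, page number, print option.
-- Print option 3 includes a per-row breakdown of the page contents.
DBCC PAGE ('AdventureWorks', 1, 100, 3);
```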

The page allocation map is a nice little addition, but really is just an extension of showing you what pages belong to which object etc.

The transaction log viewer, though, was the part I was really looking forward to seeing, primarily to help me decode more transactions, but it has been a bit disappointing – the level of detail shown is very limited, and provides no real benefit over just looking at the log directly, or using the last transaction log trick I have previously posted.

As you can see from the screenshot, the level of detail is pretty light for a simple transaction, and no actual breakdown of the log record itself is provided, which is a shame – whilst it does give you some basic information and will help some people, I think if you are at the stage where you are taking an interest in the transaction log, you are already beyond this point.

So as an educational / learning aid, it is pretty good on the page internals side – and for anyone wanting an easier way to visualize that for learning, it is still worth grabbing. I would love to see more on the log side – but at present the project appears to be in hibernation, with no changes in some considerable time, so I suspect we will not see any enhancements now.

Interval Partitioning in SQL Server 2008

Another one of those features in Oracle that we do not natively have in SQL Server is interval partitioning, where new partitions are generated for you automatically based on a defined interval. Interval partitioning is yet another form of dynamic partitioning, so the thought was: could this be achieved within SQL Server?

My initial thought was to use an instead of trigger, which would intercept the incoming values and, if appropriate, extend the partition function. An initial look at the documentation suggests it will not be trivial – the BoL states:

"Additionally, the following Transact-SQL statements are not allowed inside the body of a DML trigger when it is used against the table or view that is the target of the triggering action. CREATE INDEX, ALTER INDEX, DROP INDEX, DBCC REINDEX, ALTER PARTITION FUNCTION, DROP TABLE..."

The issue there being the alter partition function – any response to an incoming piece of data that is not properly covered by the existing partition mechanism will need to alter the partition information. Of course there are still ways around such restrictions but when experimenting, it seems the BoL is not correct to list the ‘ALTER PARTITION FUNCTION’ command in the restrictions.

There are a few caveats to the following snippets of code: I am not attempting to deal with the rolling window effect, or the complex storage patterns of a partitioned table which I have written about in other posts. This is purely designed to demonstrate that it could be achieved, not to provide a total solution to the problem. The partitions created on the fly will also all go to the Primary filegroup.

So start with a very basic partition function and scheme:

CREATE PARTITION FUNCTION pfTest (datetime)
AS RANGE LEFT FOR VALUES ('20100104' , '20100111' , '20100118', '20100125')

CREATE PARTITION SCHEME psTest
AS PARTITION pfTest ALL TO ([PRIMARY])

And generate a table on the partition scheme:

CREATE TABLE IntervalTest
(
    MyID int identity(1,1) not null,
    MyField Varchar(200),
    MyDate datetime
) ON psTest(MyDate)

The next step is an ‘Instead of’ trigger, which has to intercept the incoming data from the inserted table, and extend the partition function if required:

CREATE TRIGGER tr_IntervalTest ON IntervalTest INSTEAD OF INSERT
AS
BEGIN
    -- get the current maximum partition value
    DECLARE @max_part_dt datetime;
    DECLARE @max_inserted_dt datetime;
    DECLARE @weeks_to_add int;

    SET @max_inserted_dt = (SELECT max(MyDate) FROM inserted);

    SET @max_part_dt = (
        SELECT max(convert(datetime,value))
        from sys.partition_functions f
        inner join sys.partition_range_values rv on f.function_id = rv.function_id
        where name = 'pfTest');

    IF (@max_inserted_dt > dateadd(D,7,@max_part_dt))
    BEGIN
        -- need to potentially add multiple partition splits, it depends on
        -- how many weeks in advance the new data is.
        -- get a whole number of the weeks to add to ensure that we cover
        -- the new data
        SET @weeks_to_add = ceiling(datediff(D,@max_part_dt, @max_inserted_dt) / 7.0)

        -- loop around splitting the partition function with the new weekly values
        -- that need to be covered
        WHILE @weeks_to_add > 0
        BEGIN
            -- increase the maximum partition date by 7 days and split the function
            SET @max_part_dt = dateadd(D,7,@max_part_dt);
            ALTER PARTITION SCHEME psTest NEXT USED [Primary];
            ALTER PARTITION FUNCTION pfTest() SPLIT RANGE (@max_part_dt);
            SET @weeks_to_add = @weeks_to_add - 1
        END;
    END;

    -- finally insert the values
    INSERT INTO IntervalTest (MyField, MyDate)
    SELECT MyField, MyDate FROM inserted;
END

The code is pretty self-explanatory, but I would point out that it is only covering an insert, not an update – and this is not production code but an experiment to see if it could be done (contrary to the BoL). To ‘productionize’ this would require significant work on exception handling, performance tuning, handling multiple filegroup partitioning, the list goes on, but all of it achievable.

A little test to insert a couple of values set ahead of the partition:

insert into IntervalTest (MyField, MyDate)
select 'b', '20100505'
union
select 'c', '20100606'

And a check of the partition function now shows 23 boundary values, instead of the original 4, as follows:

2010-01-04 00:00:00.000, 2010-01-11 00:00:00.000, 2010-01-18 00:00:00.000, 2010-01-25 00:00:00.000, 2010-02-01 00:00:00.000, 2010-02-08 00:00:00.000, 2010-02-15 00:00:00.000, 2010-02-22 00:00:00.000, 2010-03-01 00:00:00.000, 2010-03-08 00:00:00.000, 2010-03-15 00:00:00.000, 2010-03-22 00:00:00.000, 2010-03-29 00:00:00.000, 2010-04-05 00:00:00.000, 2010-04-12 00:00:00.000, 2010-04-19 00:00:00.000, 2010-04-26 00:00:00.000, 2010-05-03 00:00:00.000, 2010-05-10 00:00:00.000, 2010-05-17 00:00:00.000, 2010-05-24 00:00:00.000, 2010-05-31 00:00:00.000, 2010-06-07 00:00:00.000

It has clearly created the partitions to cover the data being inserted and then performed that insertion.
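A check like the one above can be run with a query along the following lines, reusing the same catalog views as the trigger. The second statement simply repeats the trigger's arithmetic for the test data, as a sanity check on why 19 extra boundaries appear; the expected values in the comments were worked out by hand from the dates in this post.

```sql
-- List the boundary values currently held by pfTest.
SELECT rv.boundary_id, CONVERT(datetime, rv.value) AS boundary_value
FROM sys.partition_functions AS f
INNER JOIN sys.partition_range_values AS rv ON f.function_id = rv.function_id
WHERE f.name = 'pfTest'
ORDER BY rv.boundary_id;

-- Reproduce the trigger's calculation for the latest inserted date.
DECLARE @max_part_dt datetime, @max_inserted_dt datetime;
SET @max_part_dt = '20100125';      -- last boundary before the insert
SET @max_inserted_dt = '20100606';  -- latest date in the test insert

SELECT DATEDIFF(D, @max_part_dt, @max_inserted_dt) AS days_ahead,                   -- 132
       CEILING(DATEDIFF(D, @max_part_dt, @max_inserted_dt) / 7.0) AS weeks_to_add,  -- 19
       DATEADD(D, 7 * 19, @max_part_dt) AS last_boundary;                           -- 2010-06-07
```

The original 4 boundaries plus 19 weekly splits gives the 23 values listed, ending on 2010-06-07.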

So it can be done, but the constant cost of intercepting every insertion and update to provide this kind of dynamic partitioning is really not ideal, and whether it could be made sufficiently efficient to work at the sort of scale that partitioning tends to be used at is debatable. I have a feeling that it would struggle – I would need to be lent a suitably sized server and SAN to test that one and see whether it could be made efficient enough.

Blank Transactions

Busy time with a new addition to the household – sleep is clearly an optional parameter these days, but on to one of those oddities you might see in the transaction log. On many occasions you will see transactions in the log that have no operations: the individual transaction entry just has a LOP_BEGIN_XACT followed by a LOP_COMMIT_XACT, with no operations being recorded.

So what causes these?

The immediate thought is a straight:

Begin Transaction
Commit Transaction

If you try that and inspect the log, you will notice that it has not added in this mysterious, zero-operation transaction. So that is not the cause.

How about a rolled back transaction? Well, you should already know that this would not be the answer, since the log reserves space ahead during a transaction to record the undo operations needed for a rollback. To show that in more detail, given the following simple snippet of SQL:

begin transaction
insert into test (name) values ('a')
rollback transaction

The log then shows the transaction beginning, performing the insert, and then rolling it back by deleting the record and recording it as an aborted transaction.

LOP_ABORT_XACT
LOP_DELETE_ROWS
LOP_INSERT_ROWS
LOP_BEGIN_XACT

So neither of the first obvious choices is the cause. The reason seems a bit bizarre, but centres around whether a change was attempted but ignored due to being unnecessary. Set up a test table, insert a single row into it with a value of ‘a’, and then run the following statement:

begin transaction
update test set name = 'a'
commit transaction

Now when you inspect the log, there is a blank transaction: it recorded the start and end of the transaction, but no operations are shown. The same is true if the transaction is rolled back.

If the code is altered slightly so that deliberately no modification would occur, though, the same does not hold true:

begin transaction
update test set name = 'a' where 1 = 0
commit transaction

Clearly the code is designed to make no modifications, so it is not surprising that no entry occurs in the transaction log. To make the test a bit fairer, let’s design the code in a way that it might make a modification, but doesn’t.

begin transaction
update test set name = 'a' where name = 'b'
commit transaction

Still no entry in the transaction log.

So the distinction in the logging of these zero-op transactions is whether or not there was matching data to be altered: if a record was found but the alteration was unnecessary, a zero-op transaction appears in the log. It does make you wonder, why?

It also means that from an auditing perspective, the attempt to modify the data was not logged, not because it was unsuccessful, but because it was being altered to the values it already had.
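To look for these zero-operation transactions in a database, something along the following lines can be used. It is only a sketch: ::fn_dblog is undocumented, and the query assumes that a blank transaction consists of exactly two log records, a begin and either a commit or an abort.

```sql
-- Transactions whose only log records are the begin and the end.
SELECT [Transaction ID], COUNT(*) AS LogRecords
FROM ::fn_dblog(NULL, NULL)
GROUP BY [Transaction ID]
HAVING COUNT(*) = 2
   AND SUM(CASE WHEN Operation = 'LOP_BEGIN_XACT' THEN 1 ELSE 0 END) = 1
   AND SUM(CASE WHEN Operation IN ('LOP_COMMIT_XACT', 'LOP_ABORT_XACT') THEN 1 ELSE 0 END) = 1;
```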

Subtle change to ::fn_dblog in SQL 2008

Bizarrely, given that transaction log decoding is not really viable as a technique beyond interest, it’s quite surprising how many people visit just to understand or learn how to do it. Either a lot of data is being lost and no backups exist – or it fascinates a large number of people.

In either instance, there are sometimes subtle changes that can pass you by, and the use of old habits can make life a bit harder. One such recent change that I missed was an alteration to the ::fn_dblog function. I really should have spent some time investigating the new fields that had been added – but with documentation being limited to say the least, it has not been the top priority, and my habit is to fire up SQL 2005 when checking something in the transaction log – so I had not noticed them.

One of the changes is the creation of a link between the contents of sys.dm_tran_current_transaction and the transaction log itself. The contents of the view have not altered, but one of the new fields within the output from ::fn_dblog is called Xact ID – this field contains the same value as the Transaction_ID that is output from the DMV.

Now that is significantly convenient for when you’re poking around in logs trying to understand cause and effect.

A couple of simple stored procedures later to assist in saving and returning that filtered information as follows:

CREATE PROCEDURE sp_store_transaction
AS
BEGIN
    DECLARE @dm_transaction_id bigint

    SELECT @dm_transaction_id = transaction_id
    FROM sys.dm_tran_current_transaction

    IF OBJECT_ID (N'tempdb.dbo.##db_last_transaction') IS NOT NULL
        DROP TABLE ##db_last_transaction

    SELECT [transaction id]
    INTO ##db_last_transaction
    FROM ::fn_dblog(null,null)
    WHERE [Xact ID] = @dm_transaction_id
END
GO

CREATE PROCEDURE sp_get_last_transaction
AS
BEGIN
    SELECT *
    FROM ::fn_dblog(null,null)
    WHERE [Transaction ID] = (SELECT [transaction id] FROM ##db_last_transaction)
END
GO

And that is going to make looking at cause and effect far easier – the first procedure you call anywhere within your transaction to store the current transaction_id within the log, and the second you call to retrieve the log rows for that stored transaction id. Something like:

BEGIN TRAN
UPDATE tbltest SET firstname = 'ab'
EXEC sp_store_transaction
COMMIT TRAN
EXEC sp_get_last_transaction

If you are wondering why I stored only the transaction id that ties the log records together, rather than dumping the records themselves – if you dumped the records before the transaction committed or rolled back, you would not see the effect of that action. You need to retrieve all the log rows associated with that transaction after it has finished.

SQL Server Hash Partitioning

It’s been a while since the last post, primarily due to changing jobs and now spending most of my time on Oracle – although it is always good to see the other side of the coin and see what it has to offer, but I won’t be abandoning SQL Server, that is for certain.

One of the more interesting features to me in Oracle is hash partitioning – the ability to create a partition across a defined number of partitions, and then arbitrarily decide which partition the data will go in based on a hashing function. Why would that be handy? SQL Server partitioning is in effect a range partition, in which you define the dividing points on the number line / alphabet – which suits partitions based on a defined number range or date range, but does not suit partitioning of other types such as a GUID.

The merits of such a partition could be debated, since with a decent index in place the benefits of the partition elimination within the query plan can be limited. Regardless of those merits (and I am pretty sure it is not going to be performant at scale), how could SQL Server implement hash partitioning? On a side note, this could be considered semi-dynamic partitioning, in that the partition is able to cope with additional data outside of the expected range, due to the hash function.

I’ve seen a few articles try and perform hash partitioning by pre-processing the insert statement, prior to insertion into the database, but what about something a bit more native?

To start with, we need to create a partition function and partition scheme to support this endeavour; both are pretty easy to construct.

CREATE PARTITION FUNCTION [myPartitionFunction] (int)
AS RANGE LEFT FOR VALUES (100,200,300,400,500,600,700,800,900)

CREATE PARTITION SCHEME [myPartitionScheme]
AS PARTITION [myPartitionFunction] ALL TO ([FG1])

I’ve set up the partition scheme to assign all of the partitions to FG1, just for convenience. It could easily be set to multiple filegroups, and instead of the 9 boundary values used here (which give 10 partitions), this could be constructed with 999 partitions.

There are a variety of hashing algorithms and functions, but given the range covered by the partition function, I have chosen to use a very simple modulo on the converted binary of the unique identifier. The only trick here is that we must create the function with schema binding, otherwise SQL will refuse to use the function later on when we persist the column and partition on it.

CREATE FUNCTION dbo.GuidHash (@guid_value uniqueidentifier)
RETURNS int
WITH SCHEMABINDING
AS
BEGIN
    -- Convert the GUID's bytes to a bigint, then reduce it modulo 999 to a value in 0..998
    RETURN abs(convert(bigint, convert(varbinary, @guid_value))) % 999
END

That is a pretty simple hashing function, but the point is to demonstrate it can be done, not to implement the best hashing algorithm that will give the most even distribution etc. The next step is to create the table, with the persisted column defined using the GuidHash function. If the function is not schema bound, you get an error thrown at this stage.

CREATE TABLE MyTable(
    MyID UniqueIdentifier not null,
    SomeField Char(200),
    PartitionID as dbo.GuidHash(MyId) PERSISTED
) ON myPartitionScheme(PartitionID)

The surprise here is that it accepts the table definition. Since when would you expect a partitioned table’s partitioning column to be a computed column?

Time to put an index on the table. Given the data is looked up by a unique identifier, it would not be unusual to place a non-clustered index on the table and to use index alignment, i.e. place it on the same partition scheme.

CREATE NONCLUSTERED INDEX [ix_id] ON [dbo].[MyTable]
(
    [MyID] ASC,
    [PartitionID] ASC
) ON [myPartitionScheme]([PartitionID])

Populate the table with some test data:

DECLARE @guid uniqueidentifier
SET @guid = newid()
INSERT INTO mytable (myid, somefield) VALUES (@guid, 'some text')
go 10000
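Once the rows are loaded, it is worth checking how evenly the simple modulo hash has spread them. The query below is a sketch that groups the rows by the partition number that $PARTITION reports for each PartitionID; the column aliases are made up for illustration.

```sql
-- Row count and hash range per partition for the test table.
SELECT
    $PARTITION.myPartitionFunction(PartitionID) AS PartitionNumber,
    COUNT(*)                                    AS RowsInPartition,
    MIN(PartitionID)                            AS LowestHash,
    MAX(PartitionID)                            AS HighestHash
FROM dbo.MyTable
GROUP BY $PARTITION.myPartitionFunction(PartitionID)
ORDER BY PartitionNumber;
```

With hash values running from 0 to 998 and boundaries every 100, the first partition covers 101 possible values (0 to 100) and the last only 98 (901 to 998), so a small skew between partitions is to be expected.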

So what happens when we select a single row from our data? For convenience I looked up a value in the table and grabbed the GUID, then compared the two queries side by side:

SELECT * FROM mytable
WHERE myid = 'D41CA3AC-06D1-4ACC-ABCA-E67A18245596'

SELECT * FROM mytable
WHERE (partitionid = dbo.guidhash ('D41CA3AC-06D1-4ACC-ABCA-E67A18245596')
  and myid = 'D41CA3AC-06D1-4ACC-ABCA-E67A18245596')

The comparison is interesting: in percentage terms the split was 85% to 15% for the batch, and the IO statistics read:

First Query : Scan count 10, Logical Reads 21
Second Query : Scan count 1, Logical Reads 3
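Figures like these can be collected by switching on STATISTICS IO for the session before running the pair of queries. This is a sketch only: the GUID literal is the one quoted in the post and will not exist in a freshly generated table, so substitute a value selected from your own data.

```sql
SET STATISTICS IO ON;

-- No partition key supplied: every partition of the aligned index is probed.
SELECT * FROM dbo.MyTable
WHERE MyID = 'D41CA3AC-06D1-4ACC-ABCA-E67A18245596';

-- Partition key supplied via the hash: only one partition needs to be probed.
SELECT * FROM dbo.MyTable
WHERE PartitionID = dbo.GuidHash('D41CA3AC-06D1-4ACC-ABCA-E67A18245596')
  AND MyID = 'D41CA3AC-06D1-4ACC-ABCA-E67A18245596';

SET STATISTICS IO OFF;
```

The scan count and logical reads for each statement appear on the Messages tab in SSMS.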

So the hashing has clearly made the query faster, but only faster than the query that didn’t use the partition key, which shouldn’t be too surprising: partition elimination should beat checking every partition, so all this shows is that partition elimination is occurring. How does it stack up against a normal table, i.e. have we gained anything? To test, we need to put 10k rows into the same table minus the computed column, index it and perform the same kind of select. That is all easy stuff so I will not write the code here. The results of a select from a normal table:

Normal Table Query : Scan Count 1, Logical Reads 3
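The post does not list the code for the normal-table comparison. A minimal sketch of what it might look like follows; the names MyNormalTable and ix_normal_id are hypothetical, and the table is left on the default filegroup rather than any specific one.

```sql
-- Same shape as the partitioned table, minus the computed column and partition scheme.
CREATE TABLE dbo.MyNormalTable (
    MyID      uniqueidentifier NOT NULL,
    SomeField char(200)
);
GO

CREATE NONCLUSTERED INDEX ix_normal_id ON dbo.MyNormalTable (MyID ASC);
GO

-- Load 10,000 rows, one per batch execution.
INSERT INTO dbo.MyNormalTable (MyID, SomeField) VALUES (NEWID(), 'some text');
GO 10000

-- Pick an existing key, then measure a single-row lookup.
DECLARE @id uniqueidentifier;
SELECT TOP (1) @id = MyID FROM dbo.MyNormalTable;

SET STATISTICS IO ON;
SELECT * FROM dbo.MyNormalTable WHERE MyID = @id;
SET STATISTICS IO OFF;
```

The lookup here uses a variable rather than a literal, so the plan may differ slightly from the literal-based queries in the post, but the logical reads should be comparable.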

And when run side by side, the SSMS window reports a 50% split of work between the two queries within the batch – which is not surprising given the IO costs were listed as the same – so where is the catch? There is no such thing as a free lunch, and the additional cost here is the CPU to generate the PartitionID value for the hashed GUID, but as a technique to partition based on a GUID, it has some merits.

One final thing that I did notice is that under simple parameterization the GuidHash-based query does not parameterize, which would start to have detrimental effects on the query cache. Once the database was placed under forced parameterization, the query did parameterize appropriately. So you either want forced parameterization on, or to use stored procedures; I would vote for the latter.

As a technique it has some merits, but you have to remember to manually include the PartitionID column within each query and run it through the hashing function, which is not ideal, but manageable.
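A stored procedure deals with both concerns at once: the call is parameterized by definition, and the hash predicate is written in one place instead of in every query. The sketch below assumes a hypothetical procedure name, dbo.GetMyTableRow.

```sql
CREATE PROCEDURE dbo.GetMyTableRow
    @MyID uniqueidentifier
AS
BEGIN
    SET NOCOUNT ON;

    -- Supplying the hashed PartitionID lets the optimizer eliminate partitions.
    SELECT MyID, SomeField, PartitionID
    FROM dbo.MyTable
    WHERE PartitionID = dbo.GuidHash(@MyID)
      AND MyID = @MyID;
END
GO

EXEC dbo.GetMyTableRow @MyID = 'D41CA3AC-06D1-4ACC-ABCA-E67A18245596';
```

If stored procedures are not an option, forced parameterization is a database-level setting, changed with ALTER DATABASE ... SET PARAMETERIZATION FORCED. It affects every query in the database, so it is the blunter of the two tools.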

Immutable Primary Key vs Immutable Clustered Key

It is often said that a primary key should be immutable, and this advice is echoed on a multitude of sites some of which strengthen it to a ‘law’ – but we know with databases that absolutes are rare and it is very difficult to be 100% prescriptive. There is then no mention of the clustering key being immutable alongside it, which strikes me as strange since it is just as important.

What happens within SQL Server to the row if you change the clustered key?

- If you change the clustered key value the row must be physically moved and can result in page splits / fragmentation.

- A change of the clustered key requires all the non-clustered indexes to be updated to reflect the clustering key choice.

And if you change the primary key?

- A change of the primary key has to be reflected in each of the other tables that use the key as a linking mechanism.

- The primary key must still uniquely identify the row within the table.

Clearly different issues, but why does the primary key immutability get so much attention and not the clustering key? The default behaviour of SQL Server is that the primary key becomes the clustering key, so in essence all 4 points get applied, but you can choose a different primary key to the clustering key.
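Separating the two keys only takes a NONCLUSTERED keyword on the primary key constraint and a separate clustered index. The table and index names below are hypothetical and exist purely to illustrate the syntax.

```sql
-- Primary key enforced by a non-clustered index...
CREATE TABLE dbo.DemoOrder (
    OrderID   int IDENTITY(1,1) NOT NULL,
    OrderDate datetime          NOT NULL,
    Notes     varchar(200)      NULL,
    CONSTRAINT PK_DemoOrder PRIMARY KEY NONCLUSTERED (OrderID)
);
GO

-- ...while the rows are physically ordered by a different, clustered key.
CREATE UNIQUE CLUSTERED INDEX CIX_DemoOrder
    ON dbo.DemoOrder (OrderDate, OrderID);
```

In this layout an update to OrderDate changes the clustering key without touching the primary key, which is exactly the case examined in the rest of this post.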

What sort of expense are we talking about if we allow the clustering key to be mutable and start altering a row’s clustered key value? To get a better understanding of what is going on under the hood, I’ll construct an example and check the transaction log.

Creating a simple table in SQL and inserting a few rows to set up the test is pretty easy:

CREATE TABLE [dbo].[MyTable](
    [MyID] [int] IDENTITY(1,1) NOT NULL,
    [FirstName] [varchar](20) COLLATE SQL_Latin1_General_CP1_CI_AS NOT NULL,
    [SecondName] [varchar](30) COLLATE SQL_Latin1_General_CP1_CI_AS NOT NULL,
    CONSTRAINT [PK_Table_1] PRIMARY KEY CLUSTERED ( [FirstName] ASC ) WITH (IGNORE_DUP_KEY = OFF) ON [PRIMARY]
) ON [PRIMARY]
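The post does not show the rows it inserted. A sketch with made-up names follows; any values will do as long as FirstName is unique, since it is the primary key. On a freshly created table the identity column numbers these rows 1 to 4, so rows with MyID 2 and 3 exist for the updates that follow.

```sql
-- Sample data only; the names are hypothetical.
INSERT INTO dbo.MyTable (FirstName, SecondName) VALUES ('Alice', 'Anderson');
INSERT INTO dbo.MyTable (FirstName, SecondName) VALUES ('Brian', 'Baker');
INSERT INTO dbo.MyTable (FirstName, SecondName) VALUES ('Carol', 'Carter');
INSERT INTO dbo.MyTable (FirstName, SecondName) VALUES ('David', 'Dixon');
```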

Insert a few rows and then issue a simple update statement.

update mytable set firstname = 'TestFirstName', Secondname = 'TestSecondName' where MyID = 2

Inspect the transaction log and it is noticeable that the log does not contain a LOP_MODIFY_ROW or LOP_MODIFY_COLUMNS within it, but contains a LOP_DELETE_ROWS and a LOP_INSERT_ROWS. Instead of just modifying the data, SQL has removed the row and reinserted it. A few other items appear with the transaction, LOP_BEGIN_XACT and LOP_COMMIT_XACT as you would expect to start and commit the transaction. There is also a LOP_SET_BITS on the LCX_PFS which is not surprising to see either, since we have potentially affected the free space level of the page the data was inserted into.

That maps to exactly what we expect from a high level logical perspective – the row has to be moved and there is no LOP_MOVE_ROW operation. This results in the row being placed into the transaction log twice, as a before and after.
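One way to look at these log records is the undocumented fn_dblog function. The post does not say which tool was used, so treat this as an assumption; because the function is undocumented, its column set is not guaranteed across versions, and it is best kept to a test database.

```sql
-- Show the log records relevant to the update, in log order.
SELECT [Current LSN],
       Operation,
       Context,
       [Transaction ID],
       [Log Record Length],
       AllocUnitName
FROM fn_dblog(NULL, NULL)   -- NULL, NULL = no start / end LSN filter
WHERE Operation IN ('LOP_BEGIN_XACT', 'LOP_COMMIT_XACT',
                    'LOP_MODIFY_ROW', 'LOP_MODIFY_COLUMNS',
                    'LOP_DELETE_ROWS', 'LOP_INSERT_ROWS',
                    'LOP_SET_BITS')
ORDER BY [Current LSN];
```

Running the update on an otherwise idle test database keeps the output short enough to read by eye.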

What happens if we perform an update that does not include the clustered key?

update mytable set Secondname = 'AnotherTestSecondName' where MyID = 3

This time the log only includes 3 entries: the LOP_BEGIN_XACT / LOP_COMMIT_XACT pair and a single LOP_MODIFY_ROW, which is more what you would expect.

Size wise, the transaction log entry length for the first alteration was 96 + 148 + 56 + 160 + 52 = 512 bytes. For the second entry it was only 96 + 144 + 52 = 292. So the alteration used more log space and due to write ahead logging it must be committed to the disk, but the actual difference for a single row does not look too significant.
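Rather than adding the record lengths by hand, the totals can be summed per transaction. As before, this sketch assumes the undocumented fn_dblog function and its [Log Record Length] column are available on the version in use.

```sql
-- Total log bytes and record count for each transaction currently in the log.
SELECT [Transaction ID],
       COUNT(*)                 AS LogRecords,
       SUM([Log Record Length]) AS TotalBytes
FROM fn_dblog(NULL, NULL)
GROUP BY [Transaction ID]
ORDER BY MIN([Current LSN]);
```

For the two updates above, the figures quoted in the post correspond to totals of 512 bytes across 5 records and 292 bytes across 3 records.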

Well, whilst it does not look significant, you have to remember that the row being modified was very small. As previous examples have shown the LOP_DELETE_ROWS and LOP_INSERT_ROWS include the entire contents of the row being removed / added, so with a larger row the entire contents of the row would be added to the log twice, compared to the simple modification. That would start to get expensive.

So altering the clustering key is clearly expensive for the transaction log in comparison to a normal update, and this example did not have additional non-clustered indexes added to the table, which would also then require even more entries to deal with the removal and re-insertion of the non-clustered index values.

Given a choice I would make both immutable; the primary key shouldn’t be the only one to get special treatment and be designed to be immutable, make the clustering key immutable as well.

SQL Immersion Event – Dublin 2010

I attended the SQL Immersion event last year in Dublin and can honestly say that it was the best training course I have ever attended. The level of detail is phenomenal and the interaction with Paul and Kim is superb. I cannot recommend the course highly enough, and anyone who is serious about SQL should make the effort to attend one of these; I would even go as far as to say fund it yourself if you have to. Prodata have not only managed to get Paul and Kim back to run it again, but have also got 2 additional master class courses scheduled as well. I have a feeling these must be closer to including more information / material from the SQL MCM course, which would be superb, but I will have to check whether the bank balance can handle doing them.

The Immersion event is again split into two tracks, DBA and Developer, although both sides of that fence benefit from having a good in-depth understanding of the other, so I would ignore the distinction and go for the full ‘Immersion’. There is no reason that a DBA shouldn’t understand indexes and index tuning in depth, or that a developer shouldn’t have a good understanding of the transaction log and internal structure within SQL. I did the full course before and spent most evenings doing even more stuff and using the day’s material to find out new things, many of which have become topics that I have written about.

Early registration to the courses attracts a 15% discount, but using the promotion code SQLH you will get a 20% discount instead. On the full Immersion course that is a further ~100 euros off the price, which can’t be bad.

The Immersion event is running from the 28th June to 1st July, and registration is here.

The two additional master classes are being run the week after, and these are advertised as being material that is not on the Immersion course, but as mentioned – I’m not entirely sure what that is, and given how much detail is on the immersion course, that is going to have to be some very deep internals stuff.

The performance master class is being run on the 5th and 6th of July, registration is here, whilst the DR master class registration is here. Where’s that cheque book?