Archive

Which User Made That Change?

If you have spent any time tinkering about in the transaction log, you will of already come across a bit of a problem when trying to decide what was done and by whom – the ‘what part’ I have decoded in a few posts, but the ‘whom’ part is a lot harder. As far as I can tell the log only contains the SPID of the user who opened the transaction, and does not give us any indication as to who that user really was.

From an actual investigative perspective this is a bit of a painful exercise, I can see a row was deleted but to find out who / what did that actual deletion I would have to start examining either the SQL Server logs or the Windows Server Logs. The default behaviour of SQL Server security though is to only log failed login attempts so the successful ones will not show up by default – to get those appearing you need to change your SQL Server security settings. You can access these logs from the SQL management studio using either the xp_readerrorlogs or sp_readerrorlogs procedures although the nature of the log and textural values make it difficult to then combine in a set based manner – I can humanly read the values but machine reading them for any purpose is a bit of a pain – there is also the issue that those logs will be cycled – and the old logs could well be completely offline.

So I would prefer an easier solution, keeping a record of the logins within the database regardless of the SQL Server security settings, and being in a form that allows me to use a bit more of a set based solution against it. To start with, we will need a table to store the information available to us during the logon process:

create table master.dbo.spidArchive ( LoginTime datetime2(7) ,SPID integer ,ServerName nvarchar(100) ,LoginName nvarchar(100) ,LoginType nvarchar(100) ,LoginSID nvarchar(100) ,ClientHost nvarchar(100) ,IsPooled tinyint )

The spidArchive table here is created in the master database so that it can cover the connections for any of the databases. You can see we have access to a lot of useful information, not just who executed the command, but from which machine they logged in from. The next step is to get SQL Server to add a row to the table every time a login occurs – from SQL Server 2005 onwards we have had access to DDL triggers as well as DML triggers and have the ability to intercept a number of non-DML events.

create trigger spidLogin on all server after logon as declare @eventdata xml; set @eventdata = EVENTDATA(); INSERT INTO master.dbo.spidArchive ( LoginTime ,SPID ,ServerName ,LoginName ,LoginType ,LoginSID ,ClientHost ,IsPooled ) VALUES ( @eventdata.value('(/EVENT_INSTANCE/PostTime)[1]','datetime2(7)') ,@eventdata.value('(/EVENT_INSTANCE/SPID)[1]','nvarchar(100)') ,@eventdata.value('(/EVENT_INSTANCE/ServerName)[1]','nvarchar(100)') ,@eventdata.value('(/EVENT_INSTANCE/LoginName)[1]','nvarchar(100)') ,@eventdata.value('(/EVENT_INSTANCE/LoginType)[1]','nvarchar(100)') ,@eventdata.value('(/EVENT_INSTANCE/SID)[1]','nvarchar(100)') ,@eventdata.value('(/EVENT_INSTANCE/ClientHost)[1]','nvarchar(100)') ,@eventdata.value('(/EVENT_INSTANCE/IsPooled)[1]','tinyint') )

During the login process, the EventData() function returns a fixed format XML fragment from which we can extract the values we seek and simply insert into our spidArchive table. Now we have a log being taken of all connections being established to the server, we can start using this to translate from a SPID to a user, even when the user is no longer connected – as long as we know the SPID and the time, we just need to look for the closest entry in the past for that SPID, and that will indicate which user was currently logged on at the time. This function should go in the master database again.

CREATE FUNCTION dbo.ConvertSpidToName(@SPID integer, @Date datetime2(7)) RETURNS nvarchar(100) AS BEGIN DECLARE @name nvarchar(100) SELECT TOP(1) @name = LoginName FROM master.dbo.spidArchive WHERE SPID = @SPID AND LoginTime <= @Date ORDER BY LoginTime DESC; RETURN @name; END

This function just performs the logic stated above and converts the SPID and DateTime into the login name for the user. Once this infrastructure is in place we can now directly use that in a call to ::fn_dblog(null,null) to translate the SPID column

select master.dbo.ConvertSpidToName(log.SPID, log.[Begin Time]) as UserName, log.* from ::fn_dblog(null,null) log

What you will notice is that for the majority of log lines, there is no user name displayed – this is because the SPID is only recorded against the LOP_BEGIN_XACT entry, the beginning of the transaction. This doesn’t really present a problem, from previous experiments we know all the entries for an individual transaction are given a unique Tansaction ID which we can use to group them together. It becomes pretty trivial to join back to the log, and connect any transaction entries to the LOP_BEGIN_XACT record and produce the name on every row possible.

select master.dbo.ConvertSpidToName(log2.SPID, log2.[Begin Time]) as UserName, log.* from ::fn_dblog(null,null) log left join ::fn_dblog(null,null) log2 on log.[Transaction ID] = log2.[Transaction ID] and log2.Operation = 'LOP_BEGIN_XACT'

So overall it is not too hard to get the log entries attributed to the accounts that generated them.

A couple of final notes / caveats:

- If your application is using a trusted sub-system approach this of course will not work as a technique, since all the users will be logged into the application through an internal mechanism (such as a users table) and then the application service connects using it’s own credentials – always a good thing since then the user’s have no direct access to the database. In that kind of situation this is of no value, every connection will be shown up as the same user/ source.

- Within my code I chose to use datetime2(7), to be as accurate as possible on the connections and timings, you could drop to just datetime for SQL Server 2005 but with only 1/300ths of a second accuracy there is a chance on a very busy server that you could see two entries for a single SPID at the same datetime – which would pose a bit of a problem.

- The spidArchive table can not be allowed to grow unconstrained – I have not included anything here for clearing down the table, but it is not difficult to conceive of it being archived off, or cleaned up weekly via a SQL Agent job.

SQL Internals Viewer

As Captain Oates once said, ‘I am just going outside and may be some time’ – feels like quite a while since I had to time to see down and write something.

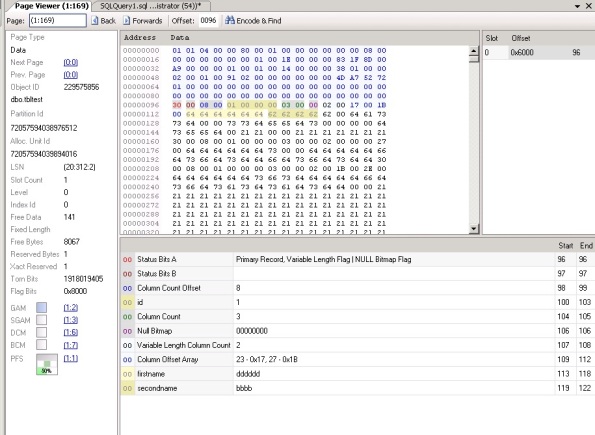

I had a bit of time to take a look at the SQL Internals Viewer (http://internalsviewer.codeplex.com/) , it has been out for some time but I had never downloaded it to play around to see how useful it is in terms of a way of learning more about the internals.

The Page Viewer is excellent, the breakdown of a page into the component parts for a row and the display of the page data is a superb aid to anyone wanting to understand how the data is stored on a page. Whilst you can use DBCC PAGE to get at all this information, presenting it in such a readable form will satisfy most people easily.

The page allocation map is a nice little addition, but really is just an extension of showing you what pages belong to which object etc.

The transaction log viewer though I was really looking forward to seeing, primarily to help me decode more transactions, but it has been a bit disappointing – the level of detail shown is very limited, and provides no real benefit over just looking at the log directly, or using the last transaction log trick I have previously posted.

As you can see from the screenshot, the level of details is pretty light for a simple transaction, no actual breakdown of the log record itself is provided, which is a shame – whilst it does given you some basic information and will help some people, I think if you are at the stage where you are taking an interest in the transaction log, you are already beyond this point.

So as an educational / learning aid, it is pretty good on the page internals side – and anyone wanting an easier way to visualize that for learning it is still worth grabbing. I would love to see more on the Log side – but at present the project appears to be in hibernation, with no changes in some considerable time, so I suspect we will not see any enhancements now.

Blank Transactions

Busy time with a new addition to the household – sleep is clearly an optional parameter these days, but on to one of those oddities you might see in the transaction log. On many occasions you will see transactions in the log that have no operations, the individual transaction entry just has a LOP_BEGIN_XACT following be a LOP_COMMIT_XACT, with no operations being recorded.

So what causes these?

The immediate thought is a straight:

Begin Transaction Commit Transaction

If you try that and inspect the log, you will notice that it has not added in this mysterious, zero-operation transaction. So that is not the cause.

How about a rolled back transaction? well you should already know that this would not be the answer since the log reserves space ahead during a transaction to record undo operations, due to a rollback. To show that in more detail, given the following simple snippet of SQL:

begin transaction insert into test (name) values ('a') rollback transaction

The log then shows the transaction beginning, performing the insert, and then rolling it back by deleting the record and recording it as an aborted transaction.

LOP_ABORT_XACT LOP_DELETE_ROWS LOP_INSERT_ROWS LOP_BEGIN_XACT

So neither of the first obvious choices are the cause, the reason seems a bit bizarre, but centres around whether any change was attempted but ignored due to being unecessary. Setting up a test table and inserting that single row into it with a value of ‘a’, run the following statement:

begin transaction update test set name = 'a' rollback transaction

Now when you inspect the log, there is a blank transaction, it recorded the start and end of the transaction, but no operations are shown. The same is true if the transaction is rolled back.

If the code is altered slightly to deliberately mean that no modification would occur though, the same does not hold true:

begin transaction update test set name = 'a' where 1 = 0 commit transaction

Clearly the code is designed to make no modifications, so it is not surprising that no entry occurs in the transaction log, to make the test a bit fairer, let’s design the code in a way that it might make a modification, but it doesn’t.

begin transaction update test set name = 'a' where name = 'b' commit transaction

Still no entry in the transaction log.

So the distinction in the logging of these zero-op transactions is whether or not there was matching data to be altered, if a record was found but that the alteration was unnecessary we get a zero-op transaction appear in the log. It does make you wonder, why?

It also means that from an auditing perspective, the attempt to modify the data was not logged, not because it was unsuccessful, but because it was being altered to the values it already had.

Subtle change to ::fn_dblog in SQL 2008

Bizarrely given that transaction log decoding is not really viable as a technique beyond interest, it’s quite surprising how many people visit just to understand or learn how to do it. Either a lot of data is being lost and no backup’s exist – or it fascinates a large number of people.

In either instance, there are sometimes subtle changes can pass you by, and the use of old habits can make life a bit harder. One such recent change that I missed was an alteration to the ::fn_dblog function. I really should have spent some time investigating the new fields that had been added – but with documentation being limited to say the least, it has not been the top priority and my habit is to fire up SQL 2005 when checking something in the transaction log – so I had not noticed them.

One of the changes is the creation of a link between the contents of sys.dm_tran_current_transaction and the transaction log itself. The contents of the view have not altered, but one of the new fields within the output from ::fn_fblog is a new field called Xact_ID – this field contains the same value as the Transaction_ID that is output from the DMV.

Now that is significantly convenient for when you’re poking around in logs trying to understand cause and effect .

A couple of simple stored procedures later to assist in saving and returning that filtered information as follows:

CREATE PROCEDURE sp_store_transaction <AS BEGIN DECLARE @dm_transaction_id bigint SELECT @dm_transaction_id = transaction_id FROM sys.dm_tran_current_transaction IF OBJECT_ID (N'tempdb.dbo.##db_last_transaction') IS NOT NULL DROP TABLE ##db_last_transaction SELECT [transaction id] INTO ##db_last_transaction FROM ::fn_dblog(null,null) WHERE [Xact ID] = @dm_transaction_id <END GO

CREATE PROCEDURE sp_get_last_transaction AS BEGIN SELECT * FROM ::fn_dblog(null,null) WHERE [Transaction ID] = (SELECT [transaction id] FROM ##db_last_transaction) END GO

And that is going to make looking at cause an effect far easier – first procedure you call anywhere within your transaction to store the current transaction_id within the log, and the second to retrieve the values for that stored transaction id. Something like:

BEGIN TRAN UPDATE tbltest SET firstname = 'ab' EXEC sp_store_transaction COMMIT TRAN sp_get_last_transaction

If you are wondering why I did not dump the log records out and only the transaction id that ties them together – if you dumped the records before the transaction committed or rolled back, you would not see the effect of that action – you need to retrieve all the log rows associated to that transaction, after it has finished.

Immutable Primary Key vs Immutable Clustered Key

It is often said that a primary key should be immutable, and this advice is echoed on a multitude of sites some of which strengthen it to a ‘law’ – but we know with databases that absolutes are rare and it is very difficult to be 100% prescriptive. There is then no mention of the clustering key being immutable alongside it, which strikes me as strange since it is just as important.

What happens within SQL Server to the row if you change the clustered key?

- If you change the clustered key value the row must be physically moved and can result in page splits / fragmentation.

- A change of the clustered key requires all the non-clustered indexes to be updated to reflect the clustering key choice.

And if you change the primary key?

- A change of the primary key, has to be reflected in each of the other tables that use the key as a linking mechanism.

- The primary key must still uniquely identify the row within the table.

Clearly different issues, but why does the primary key immutability get so much attention and not the clustering key? The default behaviour of SQL Server is that the primary key becomes the clustering key, so in essence all 4 points get applied, but you can choose a different primary key to the clustering key.

What sort of expense are we talking about if we allow the clustering key to not be immutable and started altering a row’s clustered key value? To get a better understanding of what is going on under the hood, I’ll construct an example and check the transaction log.

Creating a simple table in SQL and inserting a few rows to set up the test is pretty easy:

CREATE TABLE [dbo].[MyTable]( [MyID] [int] IDENTITY(1,1) NOT NULL, [FirstName] [varchar](20) COLLATE SQL_Latin1_General_CP1_CI_AS NOT NULL, [SecondName] [varchar](30) COLLATE SQL_Latin1_General_CP1_CI_AS NOT NULL, CONSTRAINT [PK_Table_1] PRIMARY KEY CLUSTERED ( [FirstName] ASC )WITH (IGNORE_DUP_KEY = OFF) ON [PRIMARY] ) ON [PRIMARY]

Insert a few rows and then issue a simple update statement.

update mytable set firstname = 'TestFirstName', Secondname = 'TestSecondName' where MyID = 2

Inspect the transaction log and it is noticable that the log does not contain a LOP_MODIFY_ROW or LOW_MODIFY_COLUMNS within it, but contains a LOP_DELETE_ROWS and a LOP_INSERT_ROWS. Instead of just modifying the data, SQL has removed the row and reinserted it. A few other items appear with the transaction, LOP_BEGIN_XACT and LOP_COMMIT_XACT as you would expect to start and commit the transaction. There is also a LOP_SET_BITS on the LCX_PFS which is not surprising to see either, since we have potentially affected the free space level of the page the data was inserted into.

That maps to exactly what we expect from a high level logical perspective – the row has to be moved and there is no LOP_MOVE_ROW operation. This results in the row being placed into the transaction log twice, as a before and after.

What happens if we perform an update that does not include the clustered key?

update mytable set Secondname = 'AnotherTestSecondName' where MyID = 3

This time the log only includes 2 entries, the LOP_BEGIN_XACT / LOP_COMMIT_XACT and a single LOP_MODIFY_ROW which is more as you would expect.

Size wise, the transaction log entry length for the first alteration was 96 + 148 + 56 + 160 + 52 = 512 bytes. For the second entry it was only 96 + 144 + 52 = 292. So the alteration used more log space and due to write ahead logging it must be committed to the disk, but the actual difference for a single row does not look too significant.

Well, whilst it does not look significant, you have to remember that the row being modified was very small. As previous examples have shown the LOP_DELETE_ROWS and LOP_INSERT_ROWS include the entire contents of the row being removed / added, so with a larger row the entire contents of the row would be added to the log twice, compared to the simple modification. That would start to get expensive.

So altering the clustering key is clearly expensive for the transaction log in comparison to a normal update, and this example did not have additional non-clustered indexes added to the table, which would also then require even more entries to deal with the removal and re-insertion of the non-clustered index values.

Given a choice I would make both immutable; the primary key shouldn’t be the only one to get special treatment and be designed to be immutable, make the clustering key immutable as well.

A Strange Case of a Very Large LOP_FORMAT_PAGE

I have been involved in a long running case with a client, trying to establish why an ETL process of a couple of gig of data, would result in over 5 gig of transaction log space being used.

The database was set in bulk mode, and confirmed as being correctly in bulk mode with appropriate full backups and transaction log backups occurring – so how could the log be expanding so rapidly for such a simple import. One clue was that this was only occurring on a single system, and that no other system had ever witnessed this behaviour, so it had to be something environmental.

The output from the transaction log captured during a load window was sent to me and some quick aggregation of the rows show that there was close to 280k LOP_FORMAT_PAGE entries, but instead of the normal log entry size of 84 bytes, they were coming in at 8276 bytes. This was due to the log record overhead but basically meant that for each page being allocated by the inload, an entry was added larger than the page just added.

I contacted Paul Randal to ask why the log entry would get allocated this large and he identified it as not being in bulk logged mode – which made sense given the log expansion but there was little proof. Examining an individual LOP_FORMAT_PAGE entry I could see a clear repeating pattern within the hex data – which would be consistent to a page with rows within it.

One field of what was being imported was a known value for every row (the data related to a specific week number), and translating that value into hex, it was visible multiple times. Using the breakdown of a row and that this value was field number 3 the row could be decoded and actual values of a row imported reconstructed.

Using these values the main database was queried and that row did indeed exist – the data has 27 decimal values within it also, so the chances of a random match are incredibly small. This proved that the log was recording every single row, even though it was in bulk logged mode.

There are a number of things that can prevent bulk logged mode from operating correctly, and on examining the list the problem became clear to the client’s DBA; the main database backup was still running at the same time as the data inload was being scheduled. The bulk log operations have to be fully logged during the backup to ensure that the database can be returned to a consistent state once it has been restored.

It is the only time I have seen the LOP_FORMAT_PAGE taking up this much space in a transaction log, and if anyone else gets stuck on a similar problem, google should at least find something this time.

Decoding a Simple Update Statement Within the Transaction Log

After decoding the delete statement within the transaction log, I thought I would tackle another statement – an update statement, although I am attempting the simple version of the update which is only to update a single value within the fixed width fields section. Again to assist in decoding the log entry, I have used the AdventureWorks database and know the values of the data before and after the modification, so it is easier to decode how the log entry is constructed.

As before the database has been backed up whilst in fully logged mode and the transaction log backed up to make it as small as possible. Once the database was ready I issued a simple statement against the database

update HumanResources.Employee set MaritalStatus = 'M' where employeeid = 6

The employee with the ID of 6 has changed marital status, congratulations employee id 6.

The first confusion is that two LOP_MODIFY_ROW entries show up against the LCX_Clustered context, for the HumanResources.Employee.PK_Employee_EmployeeID allocation unit / name, but only 1 modification has been made.

What is the second modification?

Against each LOP_MODIFY_ROW there is an [Offset in Row] column, which indicates where within the row the modification has been made. The first modification is recorded at byte 24, the second is at byte 57. Instead of providing a field number / name, the alteration is being recorded at a byte level, so to figure out which columns have changed, we must map out the structure in terms of byte positions for the row.

| Offset | Name | Size in Bytes | Value |

| 0 | Status Bits | 2 | |

| 2 | Column Number Offset | 2 | |

| 4 | EmployeeID | 4 | 6 |

| 8 | ContactID | 4 | 1028 |

| 12 | ManagerID | 4 | 109 |

| 16 | BirthDate | 8 | 1965-04-19 00:00:00.000 |

| 24 | MaritalStatus | 2 | S |

| 26 | Gender | 2 | M |

| 28 | HireDate | 8 | 1998-01-20 00:00:00.000 |

| 36 | SalariedFlag | 1 | 1 |

| 37 | VacationHours | 2 | 40 |

| 39 | SickLeaveHours | 2 | 40 |

| 41 | CurrentFlag | 1 | 1 |

| 41 | rowguid | 16 | E87029AA-2CBA-4C03-B948-D83AF0313E28 |

| 57 | ModifiedDate | 8 | 2010-02-20 18:13:03.710 |

| 65 | Column Count | 2 | |

| 67 | Variable Column Count | 2 | 3 |

| 69 | Variable Offets | 6 | |

| 75 | NationalIDNumber | 16 | 24756624 |

| 91 | LoginID | 44 | adventure-works\david0 |

| 135 | Title | 100 | Marketing Manager |

Again, because there are no nullable columns at all, there is no nullability bitmap within the structure. This mapping is specific to this row, but the offsets for the fixed portion of the row would be valid for all the records, it is only the variable width columns that would change per data row.

The two modifications are at byte 24 and 57, which is clearly the Marital Status and the Modified Date.

Why is the modified date being changed? The adventure works employee table has an update trigger on it, which alters the modified data column to be the current date when any change is made to the row.

For the Marital status transaction log entry, there are two values we need to logically know – what was it before the change, and what is the value after the change.

The Row Log Contents 0 and Row Log Contents 1 fields clearly provide that information

Row Log Contents 0 : 0x53 Row Log Contents 1 : 0x4D select convert(nchar,0x53) = S select convert(nchar,0x4D) = M

So they are clearly the before and after results.

Checking the transaction log entry against the trigger, the log row contents are dates within hex format, which are not too difficult to deal with

In Hex, and Row Log Contents 0 contains the previous modified date, although the SQL Management Studio results show the Hex has been shortened somewhat from the normal 8 bytes for a date. Padding the extra zeros in gives us:

0x 00 00 00 00 35 95 00 00 = 0x 00 00 95 35 00 00 00 00 = 2004-07-31 00:00:00.000

The same rules apply to the RowLog Contents 1 which has also been cut short.

0x A5 F1 D6 00 24 9D 00 00 = 00 00 9D 24 00 D6 F1 A5 = 2010-02-21 13:02:35.217

Which is unsurprisingly today’s date and when I made the modification.

The immediate question is did the log contain two entries because there were two different causes of the change, or does it record a transaction log row per value modified. Since the Offset In Row value is within the transaction log row, you could guess at 1 entry per change.

To check, I restored the database to the starting position and issued a statement with two modifications:

update HumanResources.Employee set MaritalStatus = 'M', VacationHours = 60 where employeeid = 6

The transaction log alters signficantly, the modified date LOP_MODIFY_ROW entry with an offset of 57 still gets added, but the alteration of the two values does not produce 2 x LOP_MODIFY_ROW but a single LOP_MODIFY_COLUMNS – this appears to have a more complex format that is going to be tougher to crack, so that will have to wait until another day.

How Do You Decode A Simple Entry in the Transaction Log? (Part 2)

In the previous post we pulled out the raw information relating to a transaction log entry for a deletion of a single row. In this post we will decode the binary section of the log entry for the deletion performed.

A reminder of the Row Log Contents for the Clustered Index log entry that we need to decode.

0x3000410001000000B90400001000000000000000406700004D004D0000000000CB8900004A15001E004AD0E1AA37C27449B4D593524773771800000000359500001000000003005D008500BD003100340034003100370038003000370061006400760065006E0074007500720065002D0077006F0072006B0073005C006700750079003100500072006F00640075006300740069006F006E00200054006500630068006E0069006300690061006E0020002D0020005700430036003000

So we have 180 bytes of information relating to the deletion, the question is – what is it? The most obvious answer has to be the record that has been deleted – lots of fixed log information has already been exposed as named fields, this generic variable length value must be the row that we deleted.

How to decode it though? applying the structure of a row is a good place, the format mentioned before is detailed very nicely detailed in Kalen Delaney’s SQL Internal’s book so let’s use that as a guide.

- 2 Byte : Status Bits

- 2 Bytes: Offset to find number of columns

- X Bytes:Fixed Length Columns

- 2 Bytes: Total Number of Columns in the data row

- 1 Bit per column, Rounded up: Nullability Bitmap

- 2 Bytes:Number of Variable Length Columns within the data row

- 2 Bytes per variable length column : Row Offset marking the end of each variable length column

- X Bytes:Variable Length Columns

Ok, let’s start assigning the values from the log hex dump into the fields.

Status Bits (2 Bytes) : 3000

Offset to Find Number of Columns (2 Bytes) : 4100

Because of the endian ordering in effect, the offset should be read as 0041 – 65 in Decimal. So there must be 61 Bytes of Fixed width data, since we have already used 4 bytes.

Let’s remind ourselves of the table structure:

CREATE TABLE [HumanResources].[Employee]( [EmployeeID] [int] IDENTITY(1,1) NOT NULL, [NationalIDNumber] [nvarchar](15) COLLATE SQL_Latin1_General_CP1_CI_AS NOT NULL, [ContactID] [int] NOT NULL, [LoginID] [nvarchar](256) COLLATE SQL_Latin1_General_CP1_CI_AS NOT NULL, [ManagerID] [int] NULL, [Title] [nvarchar](50) COLLATE SQL_Latin1_General_CP1_CI_AS NOT NULL, [BirthDate] [datetime] NOT NULL, [MaritalStatus] [nchar](1) COLLATE SQL_Latin1_General_CP1_CI_AS NOT NULL, [Gender] [nchar](1) COLLATE SQL_Latin1_General_CP1_CI_AS NOT NULL, [HireDate] [datetime] NOT NULL, [SalariedFlag] [dbo].[Flag] NOT NULL CONSTRAINT [DF_Employee_SalariedFlag] DEFAULT ((1)), [VacationHours] [smallint] NOT NULL CONSTRAINT [DF_Employee_VacationHours] DEFAULT ((0)), [SickLeaveHours] [smallint] NOT NULL CONSTRAINT [DF_Employee_SickLeaveHours] DEFAULT ((0)), [CurrentFlag] [dbo].[Flag] NOT NULL CONSTRAINT [DF_Employee_CurrentFlag] DEFAULT ((1)), [rowguid] [uniqueidentifier] ROWGUIDCOL NOT NULL CONSTRAINT [DF_Employee_rowguid] DEFAULT (newid()), [ModifiedDate] [datetime] NOT NULL CONSTRAINT [DF_Employee_ModifiedDate] DEFAULT (getdate()), CONSTRAINT [PK_Employee_EmployeeID] PRIMARY KEY CLUSTERED

The fixed columns are as follows:

[EmployeeID] [int] = 4 [ContactID] [int] = 4 [ManagerID] [int] = 4 [BirthDate] [datetime] = 8 [MaritalStatus] [nchar](1) = 2 [Gender] [nchar](1) = 2 [HireDate] [datetime] = 8 [SalariedFlag] [dbo].[Flag] = 1 [VacationHours] [smallint] = 2 [SickLeaveHours] [smallint] = 2 [CurrentFlag] [dbo].[Flag] = 0 - second bit. [rowguid] [uniqueidentifier] = 16 [ModifiedDate] [datetime] = 8

Adventure Works uses a user defined type of Flag, which is just declared as a bit. So thats 61 bytes of fixed width storage – which is the value we expected.

We need to know the column order, we could assume it is the same as the table declaration, but we should double check the actual colum ordering to be certain.

select colorder, syscolumns.name from syscolumns inner join systypes on syscolumns.xusertype = systypes.xusertype where id =object_id('HumanResources.Employee') and variable = 0 order by colorder colorder name -------- ------------- 1 EmployeeID 3 ContactID 5 ManagerID 7 BirthDate 8 MaritalStatus 9 Gender 10 HireDate 11 SalariedFlag 12 VacationHours 13 SickLeaveHours 14 CurrentFlag 15 rowguid 16 ModifiedDate

So the order looks good, let’s start assigning values:

[EmployeeID] [int] = 01000000 = 00000001 = 1 [ContactID] [int] = B9040000 = 000004B9 = 1209 [ManagerID] [int] = 10000000 = 00000001 = 1 [BirthDate] [datetime] = 0000000040670000 = 0000674000000000 = '1972-05-15 00:00:00.000' - (Use Select convert(datetime, 0x0000674000000000) to check) [MaritalStatus] [nchar](1) = 004D = 77 = UniCode for M (Use Select nchar(77) to check) [Gender] [nchar](1) = 4D00 = 004D = 77 = M [HireDate] [datetime] = 00000000CB890000 = 000089CB00000000 = '1996-07-31 00:00:00.000' [SalariedFlag] [dbo].[Flag] = 4A = 01001010 in Binary, which will need further work. [VacationHours] [smallint] = 1500 = 0015 = 21 [SickLeaveHours] [smallint] = 1E00 = 001E = 30 [CurrentFlag] [dbo].[Flag] = Contained within the First Flag Byte [rowguid] [uniqueidentifier] = 4AD0E1AA37C27449B4D5935247737718 [ModifiedDate] [datetime] = 0000000035950000 = 0000953500000000 = '2004-07-31 00:00:00.000' Number of Columns : 1000 = 0010 - = 16 Columns - which is correct. Nullability Bitmap : 1 bit per Column, so 16/8 = 2 Bytes : 0000 Number of Variable Rows : 0300 = 0003 = 3 Variable Length Columns Var Column End 1 : 5d00 = 005D = 93 Var Column End 2 : 8500 = 0085 = 133 Var Column End 3 : BD00 = 00BD = 189

The number of columns was at the offset of 65, that used 2 bytes, the nullability was 2, the variable number of rows was 2 bytes and each variable row pointer used a further 2 each, so the data starts at byte after e.g. 65 + 12 = 77. First variable width data ends at 93, so the first variable width column is using 16 bytes of space.

I should mention why the nullability bitmap was 2, all the columns are declared as not null, so the bitmap did not increase in size, if there was a single nullable column, then the bitmap would have a bit per column in the table – not a bit per nullable column, it is an all or nothing approach.

Raw Values : 3100 3400 3400 3100 3700 3800 3000 3700 Reverse Bytes : 0031 0034 0034 0031 0037 0038 0030 0037 Result : 14417807 (You can use Select NChar(0x0031) etc to check each value.)

Next up is 93 to 133, a gap of 40 bytes.

Raw Values : 6100 6400 7600 6500 6E00 7400 7500 7200 6500 2D00 7700 6F00 7200 6B00 7300 5C00 6700 7500 7900 3100 Reverse Bytes : 0061 0064 0076 0065 006E 0074 0075 0072 0065 002D 0077 006F 0072 006B 0073 005C 0067 0075 0079 0031 Output : adventure-works\guy1

Final column, should be 189 – 133 = 56 bytes long, which it is, and decoding it again in the same way gives the following.

Raw Values : 5000 7200 6F00 6400 7500 6300 7400 6900 6F00 6E00 2000 5400 6500 6300 6800 6E00 6900 6300 6900 6100 6E00 2000 2D00 2000 5700 4300 3600 3000 Reverse Bytes : 0050 0072 006F 0064 0075 0063 0074 0069 006F 006E 0020 0054 0065 0063 0068 006E 0069 0063 0069 0061 006E 0020 002D 0020 0057 0043 0036 0030 Result : Production Technician - WC60

That is the row contents decoded, and the deleted row’s original values are now exposed.

As you can see from the complexity of decoding one part of a trivial delete operation within the log, this is not something that you would wish to rely on / use as an audit trail etc. But hopefully it has provided some insight and added to the minimal documentation on how to approach the decoding process. I will try to decode more of the operations as, insert and update shouldn’t be too hard to manage, but sucess is not guarenteed.

How Do You Decode A Simple Entry in the Transaction Log? (Part 1)

There is always a lot of interest in reading log records within SQL, although when you start getting into it you soon realise that it is an undocumented quagmire and quickly decide that there are better things you can do with your time.

It has taken a non-trivial amount of time to decode and has created a long documented process, so it will be in two parts, so I should caveat this undertaking with two clear statements first:

- There is no real reason you want to go poking around in the log except for ‘interest value’ – if you need to actually properly read log files then as you are about to see, using commercial applications will look very cheap in comparison to the time you would spend trying to do this. Even better – make sure you have proper backup’s so that you never need to try retrieve rows from the file!

- There is basically very little external documentation to guide you in reading an entry, I am learning it by doing it, and using what little documentation there is, along with trying some logical deductions and good understanding of internals from training – it is designed to act as an example of figuring the log entry out, but also demonstrating that this is not trivial and not something you will want to do.

That said, let’s start by trying to work out a single log record.

Using the adventure works example database, I have set it into fully logged mode and taken a backup to ensure that the transaction log is being used correctly. I’ve removed the foreign key constraints and triggers on the HumanResources.EmployeeTable, since I want to be able to delete a row and not trip over the example FK’s and trigger that prevents deletion.

To get to the log file values out, I am using the following snippet of SQL:

Select * from ::fn_dblog(null,null)

On initial inspection the log has only a not too many rows, altering the Diff Map, File Header etc. So I issue the command to delete a single row from the table.

Delete from HumanResources.Employee where EmployeeId = 1

And then select the contents of the log, and I have copied some of the fields from the output:

| Current LSN | Operation | Context | Transaction ID | Log Record Length | AllocUnitName |

|---|---|---|---|---|---|

| 000001bd:000001d8:0002 | LOP_BEGIN_XACT | LCX_NULL | 0000:0000da58 | 96 | NULL |

| 000001bd:000001d8:0003 | LOP_DELETE_ROWS | LCX_MARK_AS_GHOST | 0000:0000da58 | 148 | HumanResources.Employee.AK_Employee_LoginID |

| 000001bd:000001d8:0004 | LOP_SET_BITS | LCX_DIFF_MAP | 0000:00000000 | 56 | Unknown Alloc Unit |

| 000001bd:000001d8:0005 | LOP_DELETE_ROWS | LCX_MARK_AS_GHOST | 0000:0000da58 | 124 | HumanResources.Employee.AK_Employee_NationalIDNumber |

| 000001bd:000001d8:0006 | LOP_SET_BITS | LCX_DIFF_MAP | 0000:00000000 | 56 | Unknown Alloc Unit |

| 000001bd:000001d8:0007 | LOP_DELETE_ROWS | LCX_MARK_AS_GHOST | 0000:0000da58 | 120 | HumanResources.Employee.AK_Employee_rowguid |

| 000001bd:000001d8:0008 | LOP_DELETE_ROWS | LCX_MARK_AS_GHOST | 0000:0000da58 | 108 | HumanResources.Employee.IX_Employee_ManagerID |

| 000001bd:000001d8:0009 | LOP_SET_BITS | LCX_DIFF_MAP | 0000:00000000 | 56 | Unknown Alloc Unit |

| 000001bd:000001d8:000a | LOP_DELETE_ROWS | LCX_MARK_AS_GHOST | 0000:0000da58 | 288 | HumanResources.Employee.PK_Employee_EmployeeID |

| 000001bd:000001d8:000b | LOP_SET_BITS | LCX_PFS | 0000:00000000 | 56 | HumanResources.Employee.PK_Employee_EmployeeID |

| 000001bd:000001d8:000c | LOP_COMMIT_XACT | LCX_NULL | 0000:0000da58 | 52 | NULL |

That’s a lot of entries for a single delete – which is explained when you check the AllocUnitName, the delete has to also delete the entries within the indexes and the Adventure works table I am working against does indeed have 5 indexes, 4 Non-clustered and 1 Clustered. So that is making a lot of sense, and the whole operation is surrounded by a LOP_BEGIN_XACT and LOP_COMMIT_XACT, and we know from normal SQL terminology with SET XACT ABORT ON / OFF that it is about transactional scope and whether a whole transaction rolls back if a single item within the statement.

Let’s concentrate on the record deletion for the clustered index, with the larger log length of 288. That is a long record for what is in theory marking the row as a ghost, which suggests the row is within the log record. This is also backed up by the differing lengths for the other ghost record marks, which differ in size, just as the index row size does.

What are the interesting fields available to us from the Log record that we can pick up on straight away:

- Transaction ID : All the deletions belong to the same transaction ID, so we know they are related / part of the same transactional operation.

- Previous LSN : Each subsequent deletion in the list, shows the previous one as the previous LSN, so we can see the chain of log records.

- Page ID : We can tell which page was altered.

- Slot ID : Which slot within the page was altered.

- SPID : The LOP_BEGIN_XACT row shows the SPID that issued the command

- BeginTime : The LOP_BEGIN_XACT row shows the DateTime when the command was issued.

- Transaction Name : The LOP_BEGIN_XACT row shows the type of transaction, for this one shows DELETE.

- EndTime : The LOP_COMMIT_XACT row shows the end time of the transaction.

Interestingly, there is no indication as to who issued the command, there is a TransactionSID – which might turn out to be the account SID, but that will be a tangental investigation.

The final column to have a look at is RowLogContents, for the clustered index row deletion, rowlog contents 0 has the larger set of data to work on.

0x3000410001000000B90400001000000000000000406700004D004D0000000000CB8900004A15001E004AD0E1AA37C27449B4D593524773771800000000359500001000000003005D008500BD003100340034003100370038003000370061006400760065006E0074007500720065002D0077006F0072006B0073005C006700750079003100500072006F00640075006300740069006F006E00200054006500630068006E0069006300690061006E0020002D0020005700430036003000

If that does make you go running for the hills, then you have a chance of decoding it.

That’s only 180 Bytes, but this is the log row contents, not the actual log entry itself, so the fixed log fields are not within it, let’s work on it. The structure of a row is a good place, since we know expect the structure of the row to be present within the log. The format is very nicely detailed in Kalen Delaney’s SQL Internal’s book so let’s use that.

- 2 Byte : Status Bits

- 2 Bytes: Offset to find number of columns

- X Bytes:Fixed Length Columns

- 2 Bytes: Total Number of Columns in the data row

- 1 Bit per column, Rounded up: Nullability Bitmap

- 2 Bytes:Number of Variable Length Columns within the data row

- 2 Bytes per variable length column : Row Offset marking the end of each variable length column

- X Bytes:Variable Length Columns

We can use this in Part 2 to decode the binary contents of the log record.