
Oracle: ambiguous join statements being accepted and executed

I came across a weird piece of SQL earlier today, and my first reaction was “That is just invalid SQL”. However, I was viewing it in OEM (Oracle Enterprise Manager), so it had clearly been parsed and executed. Perhaps it is not too bizarre or surprising to some, but it is a form of SQL I would never write, because of the problem inherent in it, so I didn’t expect Oracle to behave as it did.

In abstract form the statement is as follows:

```sql
select *
from a
full outer join b on a.id = b.id
inner join c on a.id = c.id
```

Perhaps that seems innocent enough, but it’s ambiguous and cannot be resolved. To demonstrate, let’s give A, B and C some minimal data.

A: records with ID = 1, 2 and 3.

B: records with ID = 1, 2 and 4.

C: records with ID = 1 and 2.

If A is joined to C first, the inner join reduces the rows to IDs 1 and 2. A full outer join to B then gives a final result of 1, 2 and 4.

If A is joined to B first, the full outer join gives rows with IDs 1, 2, 3 and 4. The inner join to C then reduces the final result to 1 and 2.
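To make the two readings concrete, here is a rough Python sketch of them on the sample data. It relies on a simplified, hypothetical model: each joined row is a dict of table alias to ID, and a missing alias stands for NULL. It is not how Oracle evaluates joins. It is only a way to check the arithmetic of the two orders.

```python
# Hypothetical model, not Oracle's implementation: a joined row is a dict of
# table alias -> id, and an alias missing from the dict stands for NULL.
A, B, C = [1, 2, 3], [1, 2, 4], [1, 2]


def table(alias, ids):
    """Turn a list of ids into single-table rows."""
    return [{alias: i} for i in ids]


def matches(row, x, y):
    """ON x.id = y.id; a NULL (missing) id never compares equal."""
    return row.get(x) is not None and row.get(x) == row.get(y)


def inner_join(left, right, x, y):
    """Keep every combined row for which the ON condition holds."""
    return [{**lr, **rr} for lr in left for rr in right if matches({**lr, **rr}, x, y)]


def full_outer_join(left, right, x, y):
    """Matched rows plus unmatched rows from both sides, NULL-padded."""
    out, matched = [], set()
    for lr in left:
        hits = [j for j, rr in enumerate(right) if matches({**lr, **rr}, x, y)]
        out.extend({**lr, **right[j]} for j in hits)
        matched.update(hits)
        if not hits:
            out.append(dict(lr))  # left row with no partner: right side is NULL
    # right rows that never matched: left side is NULL
    out.extend(dict(rr) for j, rr in enumerate(right) if j not in matched)
    return out


def ids(rows):
    """Distinct ids in a result, whichever column carries them."""
    return sorted({v for row in rows for v in row.values()})


a, b, c = table("a", A), table("b", B), table("c", C)

# Reading 1: A inner join C first, then full outer join B.
c_first = full_outer_join(inner_join(a, c, "a", "c"), b, "a", "b")
# Reading 2: A full outer join B first, then inner join C.
a_full_b = full_outer_join(a, b, "a", "b")
b_first = inner_join(a_full_b, c, "a", "c")

print(ids(c_first))   # [1, 2, 4]
print(ids(a_full_b))  # [1, 2, 3, 4]
print(ids(b_first))   # [1, 2]
```

Only the nesting of the two calls differs between the readings, and that alone changes the answer.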

This is why I considered the statement invalid as soon as I saw it. It’s ambiguous, and if SQL is treated correctly as a declarative language, nothing in the statement as written tells us which of the two answers is the correct one.

So far this is just theorycrafting, so let’s put it into some really simple code.

```sql
with a as (
  select 1 as id from dual
  union
  select 2 as id from dual
  union
  select 3 as id from dual
)
, b as (
  select 1 as id from dual
  union
  select 2 as id from dual
  union
  select 4 as id from dual
)
, c as (
  select 1 as id from dual
  union
  select 2 as id from dual
)
select a.id as a_id, b.id as b_id, c.id as c_id
from a
full outer join b on a.id = b.id
inner join c on a.id = c.id
```

The results:

```text
      A_ID       B_ID       C_ID
---------- ---------- ----------
         1          1          1
         2          2          2
```

So that looks clear: it performs the outer join and then the inner join. Seems straightforward? But if the statement is treated as declarative, as it should be, there is still an ambiguity in it. Oracle has ignored that and applied a rule to make the decision for you. I already have an issue with that approach, since there is no indication that it had to step in and resolve an ambiguity in the submitted statement.

Normally in SQL, changing the order of the joins should not make a difference to the result. It can change the explain plan for the query, especially with an ORDERED hint in place, but the results should be the same. So let’s test that concept here.

```sql
with a as (
  select 1 as id from dual
  union
  select 2 as id from dual
  union
  select 3 as id from dual
)
, b as (
  select 1 as id from dual
  union
  select 2 as id from dual
  union
  select 4 as id from dual
)
, c as (
  select 1 as id from dual
  union
  select 2 as id from dual
)
select a.id as a_id, b.id as b_id, c.id as c_id
from a
inner join c on a.id = c.id
full outer join b on a.id = b.id
```

The only change is that the inner join has moved above the full outer join. The results:

```text
      A_ID       B_ID       C_ID
---------- ---------- ----------
         1          1          1
         2          2          2
                    4
```

Now, in my view of the SQL world, this is just incorrect. The statement was ambiguous to start with and should not have been executed. Instead it should have been rejected with an exception. SQL should be treated as a declarative language, and no inference should be drawn from the order of the joins.

What’s surprising to me is that Oracle accepts, parses and executes the statement with no warning or indication of what it is doing. To make matters worse, the result is not consistent. It depends on an inference from the join order, which will vary somewhat randomly with the style of the developer writing the SQL. I would normally say that the order of the joins in a statement does not affect the final output; evidently that is not entirely true.
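By contrast, when every join is an inner join, the written order should not change the rows returned. As a rough sketch on the same sample data, using a hypothetical dict-per-row model rather than anything Oracle does internally, joining B and C to A in either order yields identical rows. Mixing in the full outer join is what makes the order matter in the two queries above.

```python
from itertools import permutations

# Sample data from above; the dict-per-row representation is only a model.
TABLES = {"a": [1, 2, 3], "b": [1, 2, 4], "c": [1, 2]}


def inner_join_on_a(rows, alias):
    """Inner join on a.id = <alias>.id: each row survives only with its matches."""
    return [{**row, alias: i} for row in rows for i in TABLES[alias] if row["a"] == i]


def run_inner_only(order):
    """Start from A, then inner-join the remaining tables in the given order."""
    rows = [{"a": i} for i in TABLES["a"]]
    for alias in order:
        rows = inner_join_on_a(rows, alias)
    return sorted((r["a"], r["b"], r["c"]) for r in rows)


results = [run_inner_only(order) for order in permutations("bc")]
print(results[0])                # [(1, 1, 1), (2, 2, 2)]
print(results[0] == results[1])  # True
```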

SQL Server Denali – Paging

The introduction of paging in SQL Server Denali will have made a significant number of developers happy, all of whom will have previously created home-baked solutions to the same problem. All those solutions share the same underlying problem: paging is by its nature inefficient. Most solutions use the ROW_NUMBER() analytic function and then sub-select data from that. For a large dataset that presents a problem, because the data has to be fully scanned, sorted and allocated row numbers. A suitable index can eliminate the sort operator, but you still end up scanning the entire index to allocate the row numbers.

In Denali, we can see that they have extended the ORDER BY clause to include a starting offset and syntax to denote how many rows we wish to return. The syntax can be examined on MSDN (http://msdn.microsoft.com/en-us/library/ms188385%28v=SQL.110%29.aspx#Offset) and in brief is:
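As a rough model of that pattern, the sketch below uses Python and made-up product names, and does not attempt to reproduce SQL Server’s execution. It shows that every row is sorted and numbered before the page is picked out, so the work done is the same whichever page is requested. The helper name `row_number_page` is hypothetical.

```python
def row_number_page(rows, sort_key, first, last):
    """Model of ROW_NUMBER() paging: sort every row, number them from 1,
    then keep the rows numbered first..last (inclusive).
    Returns (page, rows_numbered)."""
    numbered = list(enumerate(sorted(rows, key=sort_key), start=1))  # ROW_NUMBER() OVER (ORDER BY ...)
    page = [row for rn, row in numbered if first <= rn <= last]      # WHERE rn BETWEEN first AND last
    return page, len(numbered)


# Hypothetical product names, deliberately not in sorted order.
products = [f"Product {n:03d}" for n in range(500, 0, -1)]

for first in (1, 241, 491):
    page, touched = row_number_page(products, lambda name: name, first, first + 9)
    print(first, page[0], touched)
# 1 Product 001 500
# 241 Product 241 500
# 491 Product 491 500
```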

```
ORDER BY order_by_expression
    [ COLLATE collation_name ]
    [ ASC | DESC ]
    [ ,...n ]
[ <offset_fetch> ]

<offset_fetch> ::=
{
    OFFSET { integer_constant | offset_row_count_expression } { ROW | ROWS }
    [
      FETCH { FIRST | NEXT } { integer_constant | fetch_row_count_expression } { ROW | ROWS } ONLY
    ]
}
```
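In terms of results, the clause behaves like a slice over rows that are already in ORDER BY order. The Python sketch below assumes that reading. The helper names are hypothetical, and the page-number arithmetic is the common (page - 1) * page_size convention. The error checks are my assumption of what the engine rejects, not a statement of its exact behaviour.

```python
def page_to_offset(page_number, page_size):
    """1-based page number -> value for OFFSET ... ROWS."""
    if page_number < 1:
        raise ValueError("page numbers start at 1")
    return (page_number - 1) * page_size


def offset_fetch(ordered_rows, offset, fetch=None):
    """Skip `offset` rows of already-ordered input, then return at most `fetch`
    rows (all remaining rows when fetch is None, i.e. OFFSET without FETCH)."""
    if offset < 0:
        raise ValueError("OFFSET may not be negative")  # assumed engine behaviour
    if fetch is not None and fetch < 1:
        raise ValueError("FETCH must be greater than zero")  # assumed engine behaviour
    end = None if fetch is None else offset + fetch
    return ordered_rows[offset:end]


rows = list(range(1, 101))  # stand-in for 100 rows already in ORDER BY order
print(offset_fetch(rows, 10, 10))                     # rows 11 to 20
print(offset_fetch(rows, page_to_offset(3, 10), 10))  # rows 21 to 30
print(offset_fetch(rows, 95))                         # rows 96 to 100
```

Because FETCH is optional in the syntax, `fetch=None` models OFFSET on its own returning everything after the skipped rows.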

Seeing this new syntax made me want to try it out and see how the query plans are affected. I am using the trusty Adventure Works as usual. A version for Denali has been put on CodePlex, so one quick download later I was ready to test the new syntax. (Adventure Works download: http://msftdbprodsamples.codeplex.com/releases/view/55330)

For my tests I used the Production.Product table and paged the products by name. The table has a non-clustered index on the Name column as well as a clustered index on ProductID, so what would the query plan give?

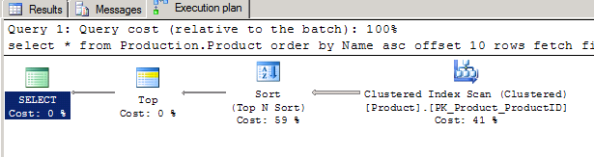

select * from Production.Product order by name asc offset 10 rows fetch first 10 rows only

And the query plan is not very surprising

So even with the new syntax the underlying problem remains: the nature of paging is that you are scanning the data. With SET STATISTICS IO turned on, the stats come back with “Table ‘Product’. Scan count 1, logical reads 15”. That is not particularly exciting and is what we would expect, given that the table is contained within 15 pages. It was because of the stats, though, that I noticed an anomaly. In one of the tests I had dropped to returning only a single row from the table, as follows:

```sql
select * from Production.Product order by name asc offset 10 rows fetch first 1 rows only
```

What I noticed was that the statistics changed to “Table ‘Product’. Scan count 1, logical reads 24”. The entire table is contained within 15 pages, so how could it jump to reading 24?

A quick check of the query plan showed what had changed. The engine decided that it was cheaper to use the Name index, which for the purposes of the ordering is narrower and therefore more efficient, and then join back to the main table via the clustered key. That is understandable, although the additional pages read make it unlikely that this plan is actually more efficient. Either way, I doubt you would see much real-world difference. An oddity, but nothing really significant in it.

This triggered a more interesting thought: what happens if we reduce our columns so that the index becomes a covering index? Is SQL Server going to get smart when making a selection? So far we have only seen full table scans occurring.

```sql
select Name, ProductID from Production.Product order by name asc offset 20 rows fetch first 10 rows only
```

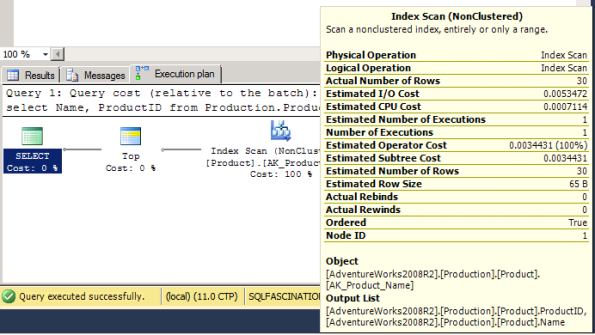

The query is now covered by the Name index, since the non-clustered index includes the clustered key (ProductID). This changes the query plan again, although it’s a pretty subtle change to notice.

The expected index scan appears, but if you look closely at the tooltip for the scan, the number of rows read is not the total number of rows in the index. Instead it is the offset plus the number of rows requested. This was also reflected in the statistics, which showed only 2 logical reads, whereas the index uses 6 pages in total. As I changed the number of rows to offset or return, the Actual Number of Rows read changed accordingly. So with a covering index in place, the query engine gets a bit more efficient and does a forward scan of the index only until it has passed a sufficient number of rows. This sounds good: we have avoided scanning the whole index and return the paged results in a slightly more efficient manner.
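A minimal sketch of that behaviour follows. It assumes the index is simply a list of (Name, ProductID) pairs already in Name order, and the data is made up. The scan counts entries as it goes and stops once offset + fetch of them have been read. This models row counts only, not pages or logical reads, and `covering_index_page` is a hypothetical helper.

```python
def covering_index_page(index_entries, offset, fetch):
    """Model a forward scan of an ordered covering index that stops as soon as
    offset + fetch entries have been read. Returns (page, entries_read)."""
    page, entries_read = [], 0
    for entry in index_entries:
        entries_read += 1
        if entries_read > offset:
            page.append(entry)
        if entries_read == offset + fetch:
            break  # enough rows passed: no need to read the rest of the index
    return page, entries_read


# Hypothetical (Name, ProductID) pairs, already in Name order as in the index.
index = [(f"Product {n:03d}", n) for n in range(1, 501)]

page, read = covering_index_page(index, offset=20, fetch=10)
print(page[0], read)  # ('Product 021', 21) 30
```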

Except that those with a quick mind will realise the performance degrades as you go further and further down the list. Requesting products 491 to 500 (offset 490, fetch 10) results in 500 rows being read, not 30. By putting in a covering index we have sacrificed consistency of query times to gain some potential performance. The full-scan solutions will, broadly speaking, take the same time regardless of which 10 rows you request, since they have to scan, sort, allocate numbers and then sub-select.

As features go, I like the paging: it removes the need for all the different homegrown solutions out there. But its performance remains a problem, and it is no silver bullet for the paging performance problems people have.
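To put rough numbers on that trade-off, the sketch below counts rows read (not pages) for every page of a hypothetical 500-row table with a page size of 10. It makes two simplifying assumptions. The covering-index scan reads exactly offset + fetch rows. The full-scan approach reads every row for every page.

```python
TOTAL_ROWS, PAGE_SIZE = 500, 10  # hypothetical table size and page size
offsets = range(0, TOTAL_ROWS, PAGE_SIZE)  # OFFSET 0, 10, ..., 490: every page

# Covering index: the forward scan stops once offset + fetch rows are read.
covering = [min(offset + PAGE_SIZE, TOTAL_ROWS) for offset in offsets]
# ROW_NUMBER()-style paging: every row is read and numbered for every page.
full_scan = [TOTAL_ROWS for _ in offsets]

for name, reads in (("covering index", covering), ("full scan", full_scan)):
    print(f"{name}: min {min(reads)}, max {max(reads)}, mean {sum(reads) / len(reads):.0f}")
# covering index: min 10, max 500, mean 255
# full scan: min 500, max 500, mean 500
```

On average the covering index reads about half as many rows, but the spread from 10 to 500 is the loss of consistency described above.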