Guidance on How to Lay Out a Partitioned Table across Filegroups

SQL Server’s Table Partitioning was one of my favourite features within the 2005 release. It really brought SQL into the mainstream when it came to holding very large data quantities, and allowed us to talk with confidence about large tables containing ‘Billions’ of rows rather than being limited to ‘Millions’. From extensive use, and a couple of SQL Labs specifically surrounding the partitioning feature, there are some rules and best practices I use to try to maximise the benefit and flexibility of the partitioned table without causing unnecessary drops in performance or increases in disk space requirements.

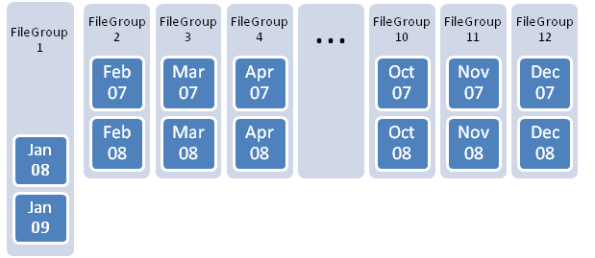

Many examples of table partitioning focus heavily on date-range-based partition functions and schemes, with a common layout mechanism of striping the weeks / months across a number of filegroups. The filegroups are re-used and, in the example picture, you can see 2 years’ worth of data striped across 12 filegroups.

This is pretty common and has an alluring charm of simplicity, but it is going to hurt when you start rolling the window. The underlying problem is that there is no gap.

For a good number of systems it would be unacceptable to remove a month of data unless you had already inserted the new month successfully. So the system starts to roll a new month of data in and, because it is required to use the same filegroups, the layout transforms into the following:

The filegroup has to expand by 50% to accommodate the new data before the old data can be removed, and once the old data is removed the filegroups now look like:

So that 50% of space is now wasted unless you use a shrink, which is probably the worst thing you can do to your filegroup and data files at that point in time. Shrink can fragment the data to the extreme and is to be avoided at all costs, which means you will have to maintain a 50% space penalty for the layout on every filegroup. That might not sound a lot, but on a large database in an enterprise with mirrored SANs, that additional 50% is going to cost a substantial amount.

There are also other issues. SQL Server allows you to back up at a filegroup level, but because each filegroup mixes old and new months, you are forced to re-back up historic data that will not alter (Jan 08) whenever you back up the recently inserted Jan 09 data. So there is an impact on backup space, backup times and restore times.

The simplicity of the initial layout makes it seem like a good idea, but the side-effects are not pleasant. You can alter the layout and choose to have 6 filegroups, each storing 4 months of data, and then the expansion is only from 4 to 5, so a 25% overhead. It is better, but it is still a cost overhead. The ultimate extreme is to place it all in one filegroup, but there are a number of difficulties and contention points with that.
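The 50% and 25% figures are simple arithmetic: a filegroup that normally holds k months must briefly hold k + 1. Here is a minimal sketch of that calculation, assuming every month is the same size. The function name `reuse_overhead` is purely illustrative and not part of any SQL Server tooling.

```python
from fractions import Fraction


def reuse_overhead(months_retained: int, filegroups: int) -> Fraction:
    """Fractional growth each re-used filegroup needs during a roll.

    Assumes equally sized months, and that the new month must be
    loaded before the oldest month is removed.
    """
    if filegroups <= 0 or months_retained % filegroups != 0:
        raise ValueError("months must divide evenly across filegroups")
    months_per_filegroup = months_retained // filegroups
    # The filegroup briefly holds one extra month on top of its usual load.
    return Fraction(1, months_per_filegroup)


print(reuse_overhead(24, 12))  # 1/2, the 50% case
print(reuse_overhead(24, 6))   # 1/4, the 25% case
print(reuse_overhead(24, 1))   # 1/24, everything in one filegroup
```

The last line shows why the single filegroup looks attractive on space alone. The objections to it are the contention and management problems, not the arithmetic.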

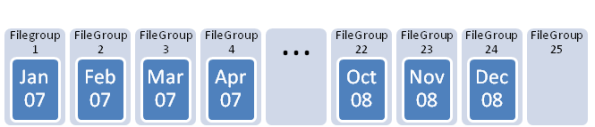

A better approach is to use a different file group per month, but then also create an additional spare filegroup, so that no filegroup is forced to expand, as shown:

The difference here is that we have one free filegroup that is not part of the current partition scheme / function definition, but will be allocated as the ‘Next Used’ filegroup for the partition scheme, so that when we split the partition function that filegroup is brought into the scheme and used. The old data that is going to be removed will provide an empty filegroup to be used for the next month’s import. In essence the head of the partition function is chasing the tail and will never catch up.

The space expansion for this layout is 1/n, where n is the number of partitions to be stored, so for our 24 month example it is a 1/24th expansion, a considerable saving. Even better, you can choose to utilise filegroup backups for the older static months of data.
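To make the "head chasing the tail" idea concrete, here is a small simulation of the rotation. It only models which month lives in which filegroup and does not issue any SQL. The filegroup names (`FG1` to `FG25`), the month labels and the `roll` helper are assumptions made for illustration.

```python
from collections import deque


def roll(window: deque, spare: str, new_month: str) -> str:
    """Roll the window one month; return the filegroup freed as the next spare.

    `window` holds (month, filegroup) pairs, oldest first.
    """
    # The new month lands in the spare ('Next Used') filegroup first...
    window.append((new_month, spare))
    # ...and only then is the oldest month removed, emptying its filegroup.
    _, freed = window.popleft()
    return freed


# Hypothetical layout: 24 months (Jan 08 to Dec 09) in FG1..FG24, FG25 spare.
months = [f"{y}-{m:02d}" for y in (2008, 2009) for m in range(1, 13)]
window = deque((month, f"FG{i}") for i, month in enumerate(months, start=1))
spare = "FG25"

for new_month in ("2010-01", "2010-02", "2010-03"):
    spare = roll(window, spare, new_month)
    print(new_month, "loaded; next spare is", spare)
```

After any number of rolls the window still holds 24 months in 24 distinct filegroups, and exactly one filegroup sits empty waiting for the next load.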

This though is not perfect for a couple of reasons, primarily data loading and indexing. To improve the picture further, there should be an additional filegroup dedicated to the data staging / loading that occurs when bringing data into the partitioned table.

The purpose of this is twofold:

- The disks used for loading are separate from the disks used for the actual data so that we can maintain Quality of Service on the main table.

- The data wants to be loaded as a heap for speed, but is likely to need sorting prior to insertion. An in-place sort would expand one of the main filegroups, which is to be avoided.

By loading into a staging filegroup, you give yourself the opportunity to then use a clustered index to sort the data and move it to the appropriate filegroup, prior to being constrained and switched in to the main partitioned table. If you had loaded the data into FG25 and then tried to apply a clustered index, it would have doubled the size of the filegroup again, as it needs to write all the new rows out and commit them before the heap can be deleted. That would put you back at square one, wasting 50% of the disk space.

The staging filegroup does cost us another filegroup’s worth of space, so the 24 initial filegroups have grown to 26, which is still a smaller expansion than the potential 50%.
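The layouts discussed so far can be compared side by side with a rough calculation. This sketch assumes equally sized months and a staging filegroup sized for one month of data. Both are simplifications of mine, not measurements.

```python
MONTHS = 24  # months retained; each is assumed to need one "unit" of disk

# Units of disk to provision under each layout (equal-sized months assumed).
layouts = {
    "12 reused filegroups": 12 * 3,      # each holds 2 months, must fit 3
    "6 reused filegroups": 6 * 5,        # each holds 4 months, must fit 5
    "24 + spare": MONTHS + 1,            # one filegroup per month plus the spare
    "24 + spare + staging": MONTHS + 2,  # as above, plus a staging filegroup
}

for name, units in layouts.items():
    overhead = (units - MONTHS) / MONTHS
    print(f"{name}: {units} units, {overhead:.1%} overhead")
```

Under those assumptions the 26-filegroup layout costs roughly 8% extra space, against 50% for the original 12-filegroup stripe.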

So some simple guidelines are:

- For a partition function that is going to have N partitions, create N+1 Filegroups.

- ETL / Stage your data into a dedicated staging filegroup.

- Move your data using a clustered index creation to the ‘spare’ filegroup you always have.

- Switch new data in, then switch old data out, creating the new ‘spare’.

It is not entirely flawless however. There is a problem in using such a design if the quantity of data per partition is going to vary by a large amount; you then have to provision each filegroup with enough space to cope with the fluctuations, which in itself can result in wasted space and starts chipping away at the gains made.

It works best as a technique on datasets that remain relatively consistent in terms of the number of rows per partition, and as the number of partitions goes up, the savings increase.
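The caveat about uneven partitions can be put into numbers. Because the filegroups rotate, any one of them may eventually hold the largest month, so each has to be sized for the peak. The monthly sizes below are invented purely to illustrate the effect.

```python
# Hypothetical monthly sizes in GB; real figures depend on your data.
monthly_gb = [100, 95, 110, 240, 105, 98]

# Any filegroup can end up holding any month, so each is sized for the peak.
peak = max(monthly_gb)
filegroups = len(monthly_gb) + 1  # N partitions plus the spare
provisioned = filegroups * peak
used = sum(monthly_gb)

print(f"provisioned {provisioned} GB for {used} GB of data")
print(f"overhead {(provisioned - used) / used:.0%}")
```

In this made-up case a single outsized month pushes the provisioned space to more than double the data actually stored, which wipes out the saving.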